The future of (open) human neuroscience

Stanford University

Why have I spent the last decade of my career trying to make neuroscience more open and reproducible?

My first faculty position: MGH-NMR Center, 1999

- Median sample size for neuroimaging studies in 1999 was N = 8 (David et al.)

- Poldrack RA et al. (1998), Cerebral Cortex: N = 6

- Poldrack RA et al. (1999), Neuropsychology: N = 8

- Poldrack RA et al. (1999), NeuroImage: N = 8

- Klingberg T, …, Poldrack RA (2000), Neuron: N = 6 poor readers, 11 controls

- Temple E, Poldrack RA et al. (2000), PNAS: N = 8 dyslexics, 10 controls

- Poldrack RA et al. (1998), Cerebral Cortex

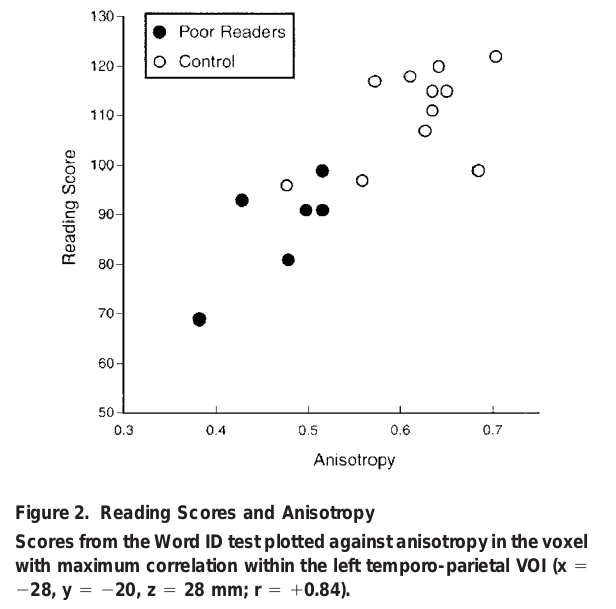

Small-sample correlations

Klingberg et al., 2000, Neuron

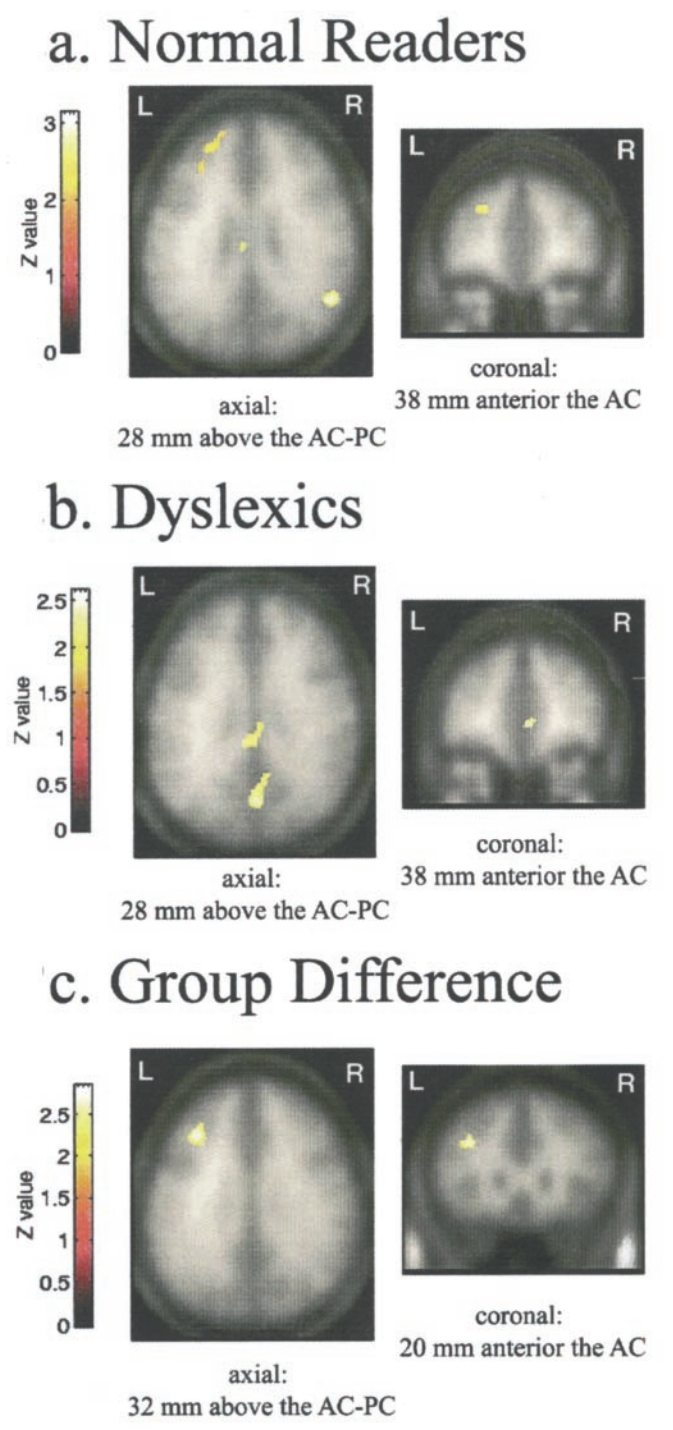

Small-sample group comparisons

- Temple, Poldrack et al., 2000, PNAS

- N = 8 dyslexics, 10 controls

- “Voxels were considered statistically significant at an uncorrected P < 0.005.”

- Figure caption: “fMRI response to rapid auditory stimuli in normal and dyslexic readers (P < 0.025).”

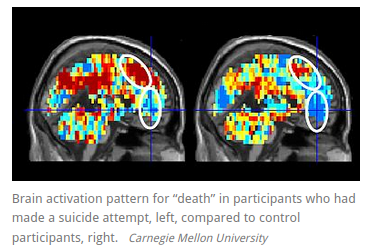

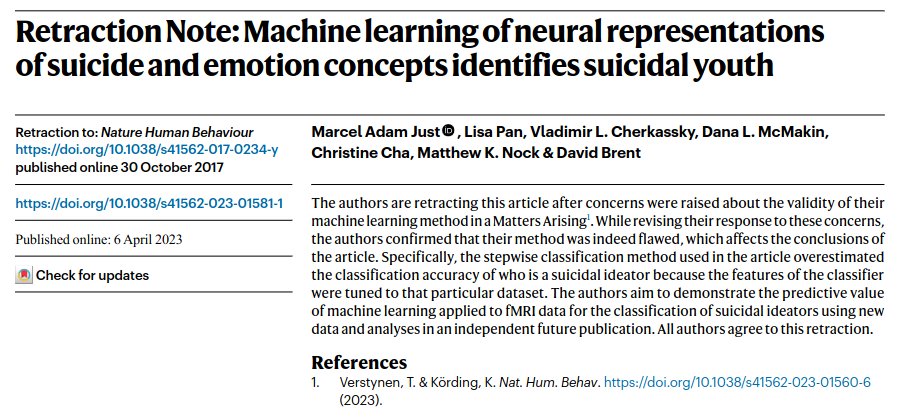
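The arithmetic behind an uncorrected voxelwise threshold is easy to sketch. Assuming a hypothetical 50,000 independently tested voxels with no true effect (real voxels are spatially correlated, so the effective number of tests is smaller), P < 0.005 uncorrected is expected to yield about 250 false-positive voxels, and at least one false positive is practically certain:

```python
def expected_false_positives(n_tests: int, alpha: float) -> float:
    """Expected number of false positives when every test is null and uncorrected."""
    return n_tests * alpha


def prob_any_false_positive(n_tests: int, alpha: float) -> float:
    """Familywise error rate, assuming the null tests are independent."""
    return 1.0 - (1.0 - alpha) ** n_tests


def bonferroni_alpha(n_tests: int, alpha: float = 0.05) -> float:
    """Per-test threshold that keeps the familywise error rate at or below alpha."""
    return alpha / n_tests


N_VOXELS = 50_000  # hypothetical number of voxels in the analysis mask
print(expected_false_positives(N_VOXELS, 0.005))
print(prob_any_false_positive(N_VOXELS, 0.005))
print(bonferroni_alpha(N_VOXELS))
```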

Questionable research practices in neuroimaging led to a series of credibility crises

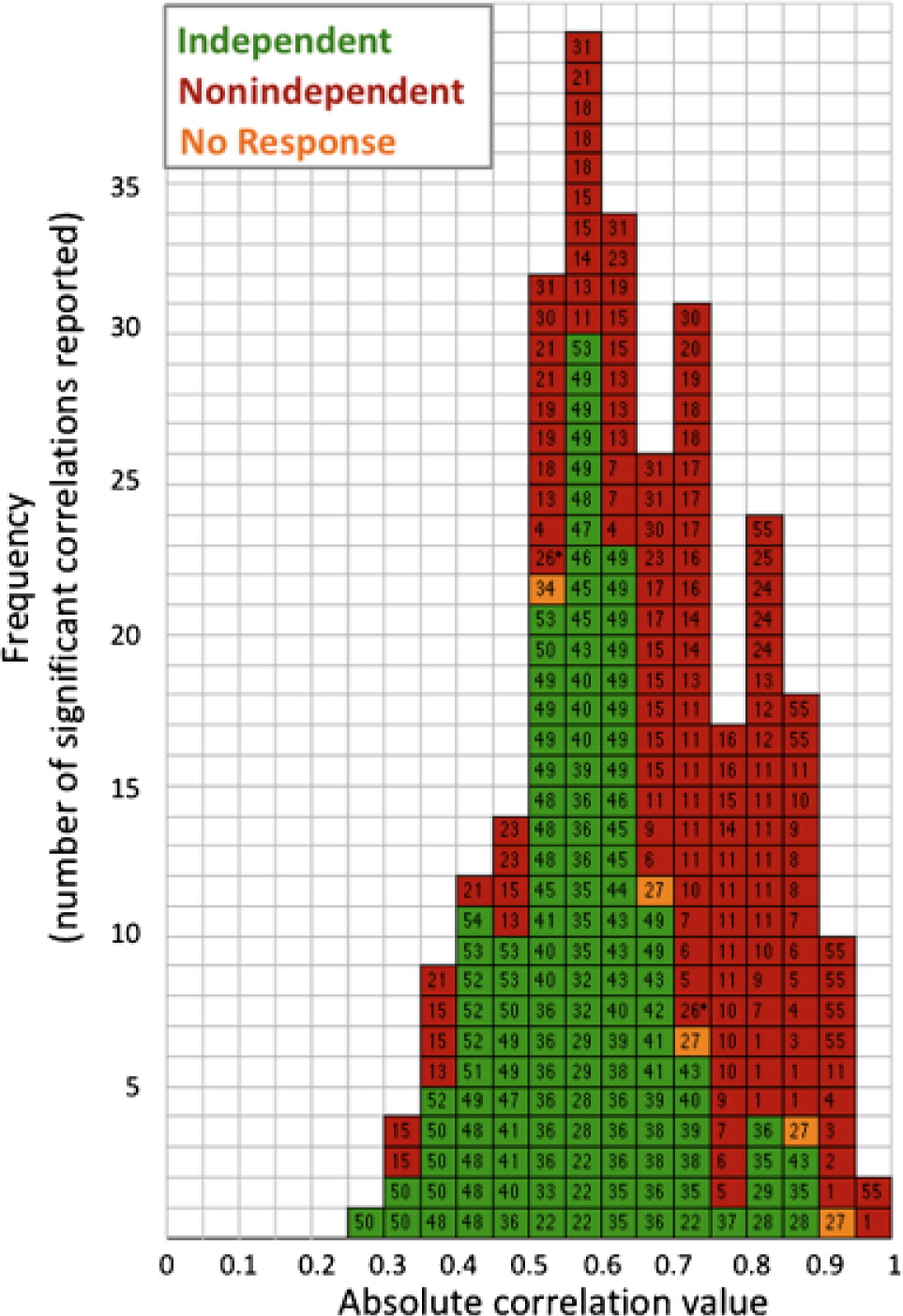

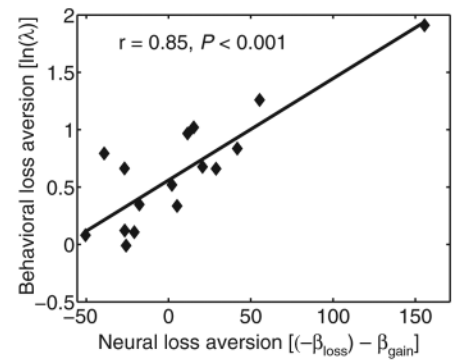

2008: Voodoo correlations and circularity

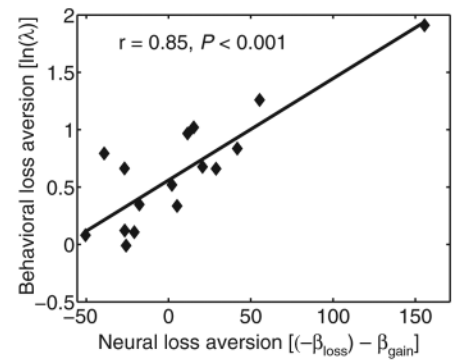
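Circularity arises when the same data are used both to select voxels and to estimate the effect in them. A minimal simulation, assuming pure-noise data, 16 subjects and a hypothetical selection threshold of r > 0.5: correlations reported in the selected voxels are large by construction, while the same voxels re-estimated in an independent null sample hover around zero.

```python
import random
import statistics


def pearson_r(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def simulate_circularity(n_subjects=16, n_voxels=2000, threshold=0.5, seed=0):
    """Select voxels on noise data, then compare circular vs independent estimates."""
    rng = random.Random(seed)

    def null_sample():
        # Pure noise: no voxel is truly related to behavior.
        behavior = [rng.gauss(0, 1) for _ in range(n_subjects)]
        voxels = [[rng.gauss(0, 1) for _ in range(n_subjects)] for _ in range(n_voxels)]
        return behavior, voxels

    behavior, voxels = null_sample()
    r = [pearson_r(v, behavior) for v in voxels]
    selected = [i for i, value in enumerate(r) if value > threshold]

    # Circular: report the correlation in the same data used for selection.
    circular = statistics.fmean(r[i] for i in selected)

    # Independent: re-estimate the selected voxels in a fresh null sample.
    new_behavior, new_voxels = null_sample()
    independent = statistics.fmean(pearson_r(new_voxels[i], new_behavior) for i in selected)
    return len(selected), circular, independent


n_selected, circular, independent = simulate_circularity()
print(f"{n_selected} voxels selected; circular r = {circular:.2f}, independent r = {independent:.2f}")
```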

Voodoo correlations in our work

Tom et al., 2007, Science

Poldrack & Mumford, 2009, SCAN

2022: Circularity 2.0: Machine learning edition

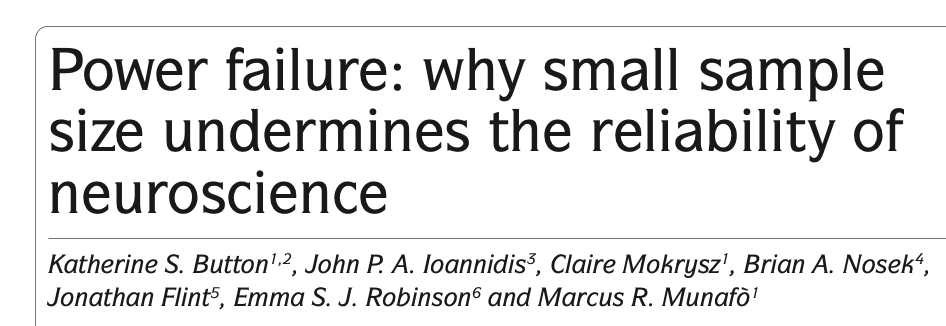

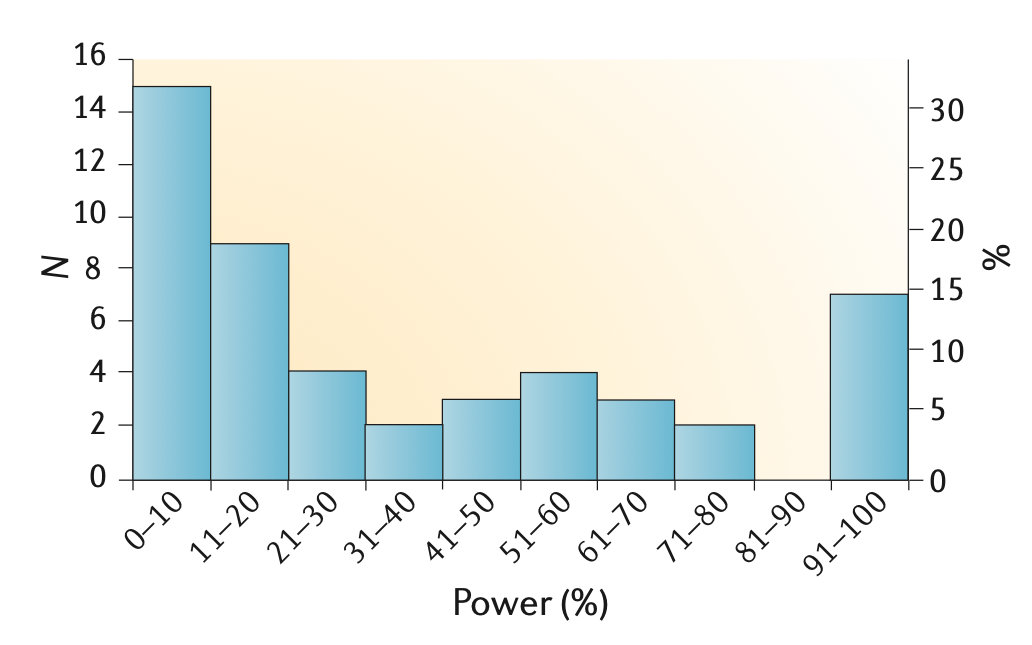

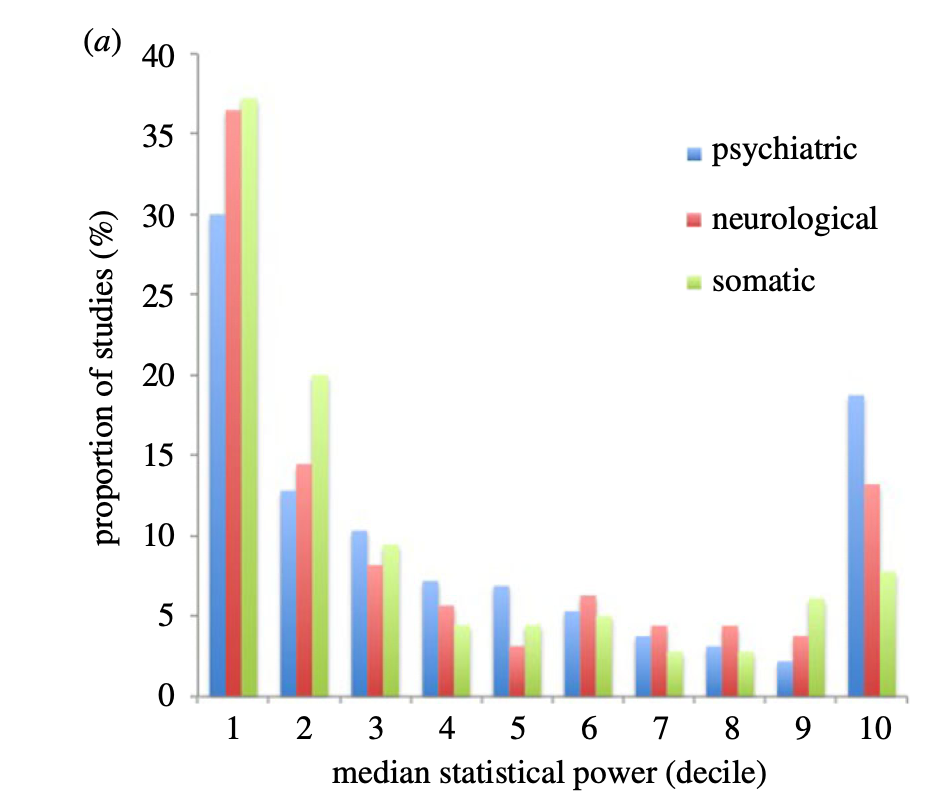

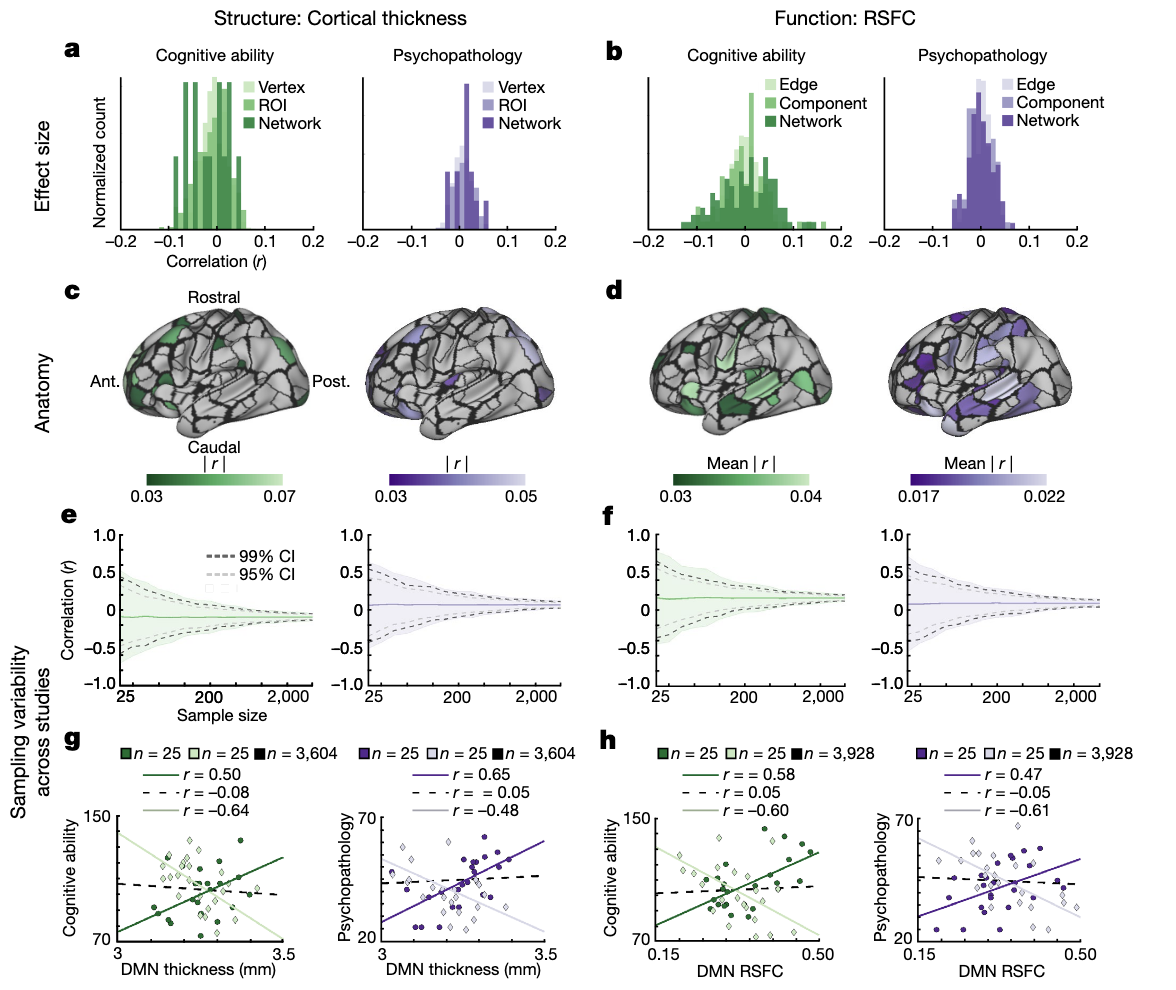
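The machine learning version of circularity is data leakage, for example choosing features on the full dataset before cross-validation. The sketch below uses only the standard library and pure-noise data; the dataset size, k = 20 features and the nearest-centroid classifier are arbitrary choices for illustration. Selecting features outside the cross-validation loop yields accuracy well above chance, while selecting them inside each training fold does not.

```python
import random
import statistics


def make_noise_dataset(n_per_class=20, n_features=1000, seed=0):
    """Pure-noise features with balanced, arbitrary class labels."""
    rng = random.Random(seed)
    y = [0] * n_per_class + [1] * n_per_class
    X = [[rng.gauss(0, 1) for _ in range(n_features)] for _ in y]
    return X, y


def select_features(X, y, k):
    """Indices of the k features with the largest absolute class-mean difference."""
    scores = []
    for j in range(len(X[0])):
        a = statistics.fmean(row[j] for row, lab in zip(X, y) if lab == 0)
        b = statistics.fmean(row[j] for row, lab in zip(X, y) if lab == 1)
        scores.append((abs(a - b), j))
    scores.sort(reverse=True)
    return [j for _, j in scores[:k]]


def nearest_centroid(X_train, y_train, x, features):
    """Predict the class whose mean (over the selected features) is closest to x."""
    best_label, best_dist = None, float("inf")
    for label in (0, 1):
        rows = [row for row, lab in zip(X_train, y_train) if lab == label]
        dist = sum((statistics.fmean(r[j] for r in rows) - x[j]) ** 2 for j in features)
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


def loo_accuracy(X, y, k=20, leaky=False):
    """Leave-one-out accuracy; leaky=True selects features on the full dataset first."""
    leaked = select_features(X, y, k) if leaky else None
    correct = 0
    for i in range(len(X)):
        X_train, y_train = X[:i] + X[i + 1:], y[:i] + y[i + 1:]
        # Correct practice: selection sees only the training fold.
        features = leaked if leaky else select_features(X_train, y_train, k)
        correct += nearest_centroid(X_train, y_train, X[i], features) == y[i]
    return correct / len(X)


X, y = make_noise_dataset()
print("feature selection outside CV:", loo_accuracy(X, y, leaky=True))
print("feature selection inside CV: ", loo_accuracy(X, y, leaky=False))
```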

2013: Power failure

Low power -> unreliable science

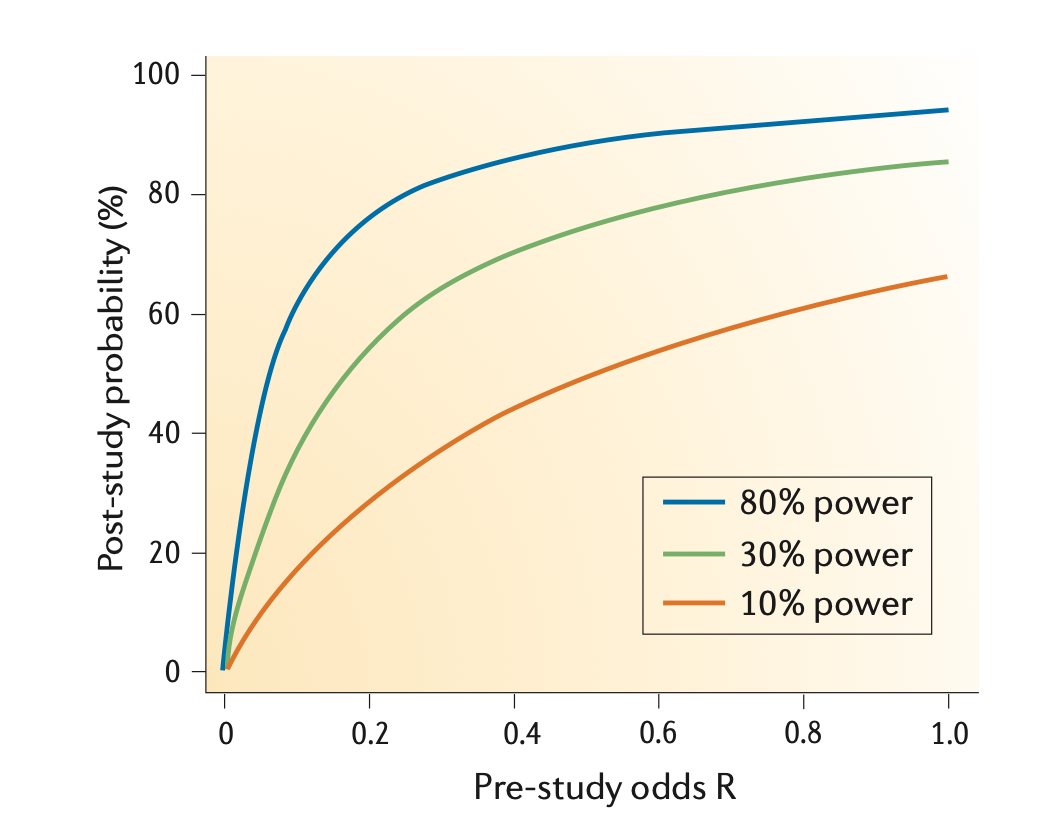

Positive Predictive Value (PPV): The probability that a positive result is true

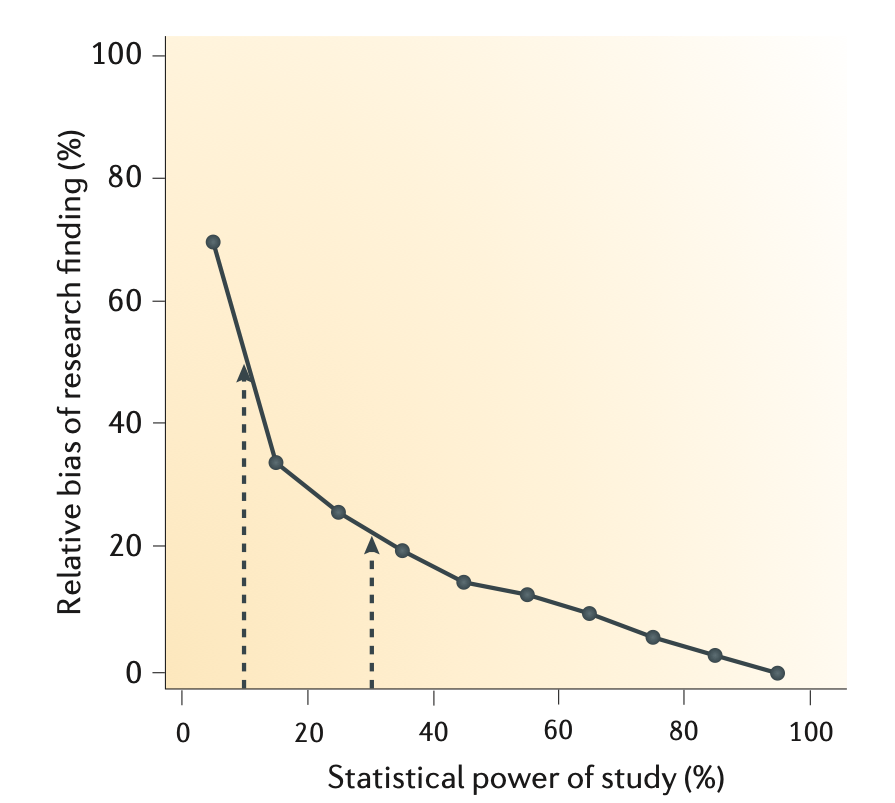
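Button et al. (2013) express PPV in terms of power (1 − β), the significance level α and the pre-study odds R that a tested effect is real: PPV = ((1 − β) × R) / ((1 − β) × R + α). A direct transcription, with R = 0.25 chosen purely for illustration:

```python
def positive_predictive_value(power: float, prior_odds: float, alpha: float = 0.05) -> float:
    """PPV = (power * R) / (power * R + alpha), where R is the pre-study odds of a true effect."""
    true_positive_weight = power * prior_odds
    return true_positive_weight / (true_positive_weight + alpha)


R = 0.25  # illustrative assumption: one true effect for every four null effects tested
for power in (0.8, 0.5, 0.2):
    print(f"power = {power:.1f}  PPV = {positive_predictive_value(power, R):.2f}")
```

Under these assumptions, dropping power from 0.8 to 0.2 lowers the PPV from 0.80 to 0.50.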

Winner’s Curse: overestimation of effect sizes for significant results

Button et al., 2013

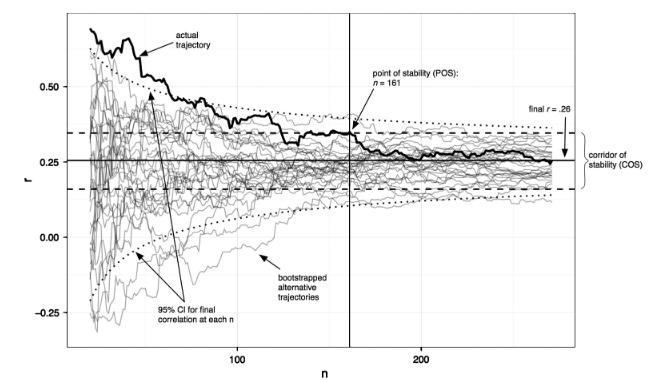
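A small simulation shows why significant results from low-powered studies overestimate effects. It assumes one-sample studies with a known standard deviation of 1, a hypothetical true effect of 0.3, n = 20 and a two-sided z-test at α = 0.05 (a simplification of the usual t-test). The average over all studies recovers the true effect; the average over the significant ones is inflated.

```python
import math
import random
import statistics


def winners_curse(true_effect=0.3, n=20, n_studies=5000, seed=1):
    """Return (power, mean estimate over all studies, mean over significant studies)."""
    rng = random.Random(seed)
    critical = 1.96 / math.sqrt(n)  # smallest |mean| reaching p < 0.05 when SD = 1
    estimates = [
        statistics.fmean(rng.gauss(true_effect, 1) for _ in range(n))
        for _ in range(n_studies)
    ]
    significant = [m for m in estimates if abs(m) > critical]
    power = len(significant) / n_studies
    return power, statistics.fmean(estimates), statistics.fmean(significant)


power, mean_all, mean_significant = winners_curse()
print(f"power = {power:.2f}, mean of all = {mean_all:.2f}, mean of significant = {mean_significant:.2f}")
```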

Small samples = high instability of statistical estimates

Schönbrodt & Perugini, 2013

Marek et al., 2022

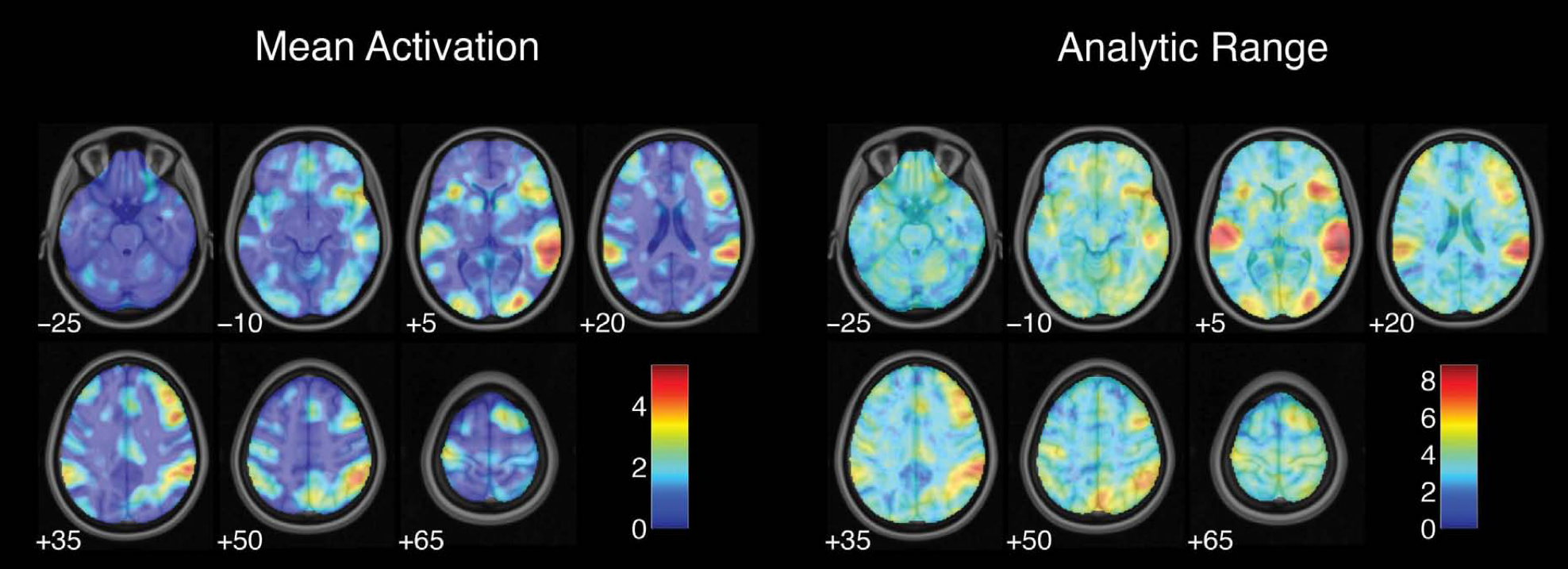
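The instability of small-sample correlations shows up directly in the width of their confidence intervals. The sketch uses the standard Fisher z approximation and an illustrative correlation of r = 0.1 (not a value taken from the cited papers):

```python
import math


def correlation_ci(r: float, n: int, z_crit: float = 1.96):
    """Approximate 95% confidence interval for Pearson r via the Fisher z-transform."""
    z = math.atanh(r)
    se = 1.0 / math.sqrt(n - 3)
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)


for n in (25, 250, 2000):
    lo, hi = correlation_ci(0.1, n)
    print(f"n = {n:5d}  r = 0.10  95% CI [{lo:+.2f}, {hi:+.2f}]")
```

At n = 25 the interval spans both negative and moderately positive values; only in the thousands does it exclude zero.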

2012: Effects of analytic variability

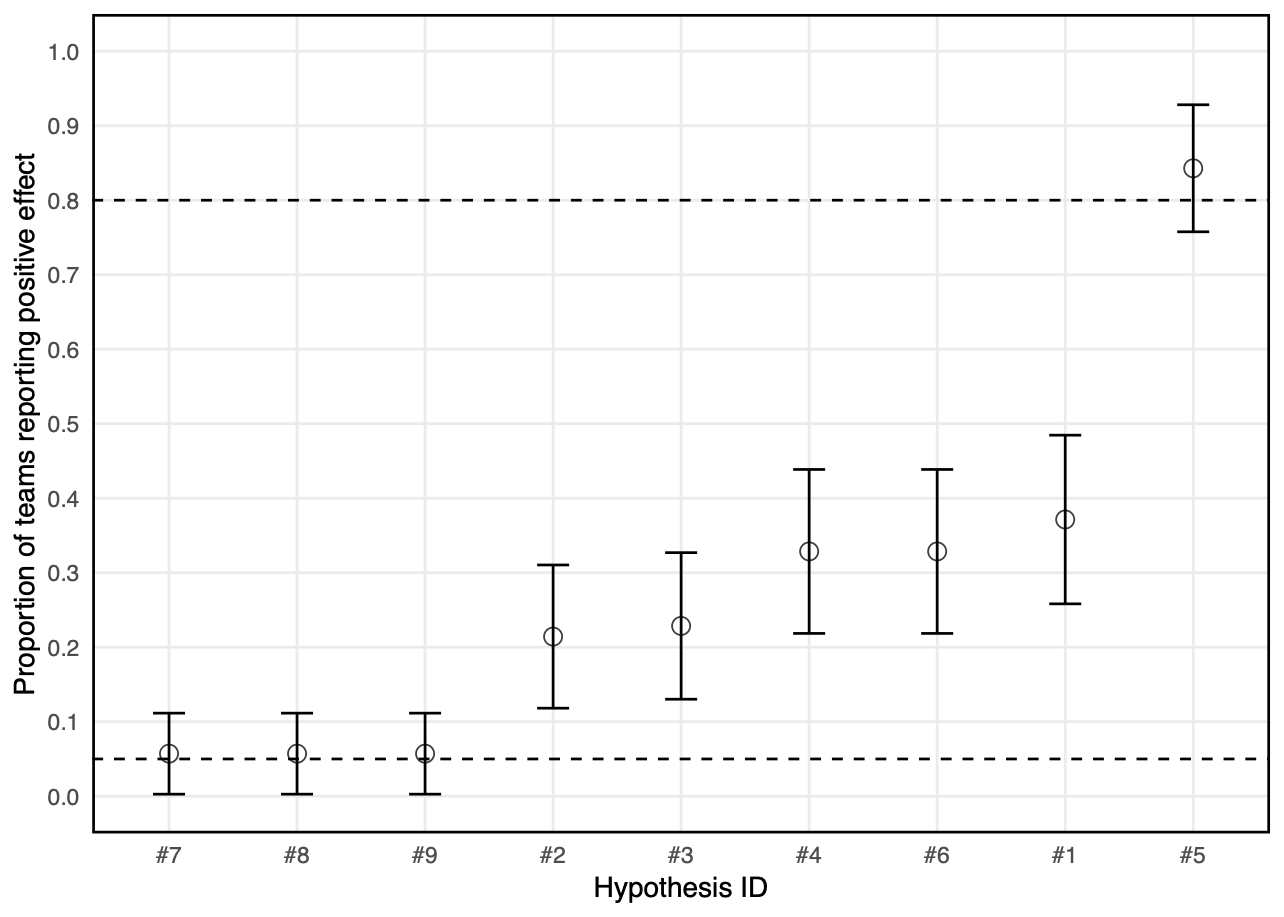

What is the effect of analytic variability in the wild?

- 70 teams tested 9 hypotheses using the same dataset

- No two teams used the same workflow

- Across teams there were 33 different patterns of outcomes

- For any hypothesis, there are at least 4 workflows that can give a positive result

Botvinik-Nezer et al., 2020, Nature
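The number of defensible workflows grows multiplicatively with each analysis choice. The options below are hypothetical and far fewer than a real fMRI pipeline offers, yet they already give 144 combinations. The second function shows how quickly the chance of at least one false positive grows if several workflows are tried on null data; treating the workflows as independent probably overstates the inflation, since real pipelines produce correlated results.

```python
import itertools

# Hypothetical forks in an fMRI analysis; real workflows have many more.
CHOICES = {
    "software": ["SPM", "FSL", "AFNI"],
    "smoothing_fwhm_mm": [0, 4, 6, 8],
    "motion_regressors": [6, 24],
    "hrf": ["canonical", "canonical + derivatives"],
    "inference": ["voxelwise FWE", "cluster FWE", "FDR"],
}


def enumerate_pipelines(choices):
    """Return every combination of options as a dict (the 'multiverse')."""
    keys = list(choices)
    return [dict(zip(keys, combo)) for combo in itertools.product(*choices.values())]


def chance_any_positive(n_pipelines, alpha=0.05):
    """P(at least one false positive) if the pipelines were independent null tests."""
    return 1.0 - (1.0 - alpha) ** n_pipelines


pipelines = enumerate_pipelines(CHOICES)
print(len(pipelines), "pipelines")
for k in (1, 4, 20):
    print(f"{k:3d} pipelines tried -> P(any false positive) = {chance_any_positive(k):.2f}")
```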

These crises have inspired a credibility revolution in neuroimaging over the last decade, strongly focused on open science practices.

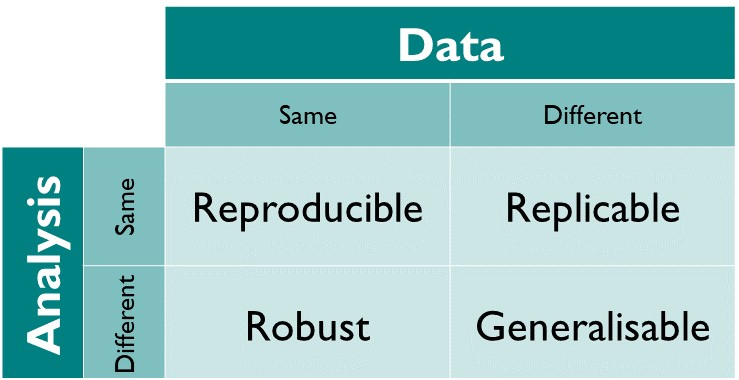

Transparency is essential for reproducibility

“we can distill Claerbout’s insight into a slogan:

An article about computational science in a scientific publication is not the scholarship itself, it is merely advertising of the scholarship. The actual scholarship is the complete software development environment and the complete set of instructions which generated the figures.”

- Buckheit & Donoho, 1995

Jon Claerbout

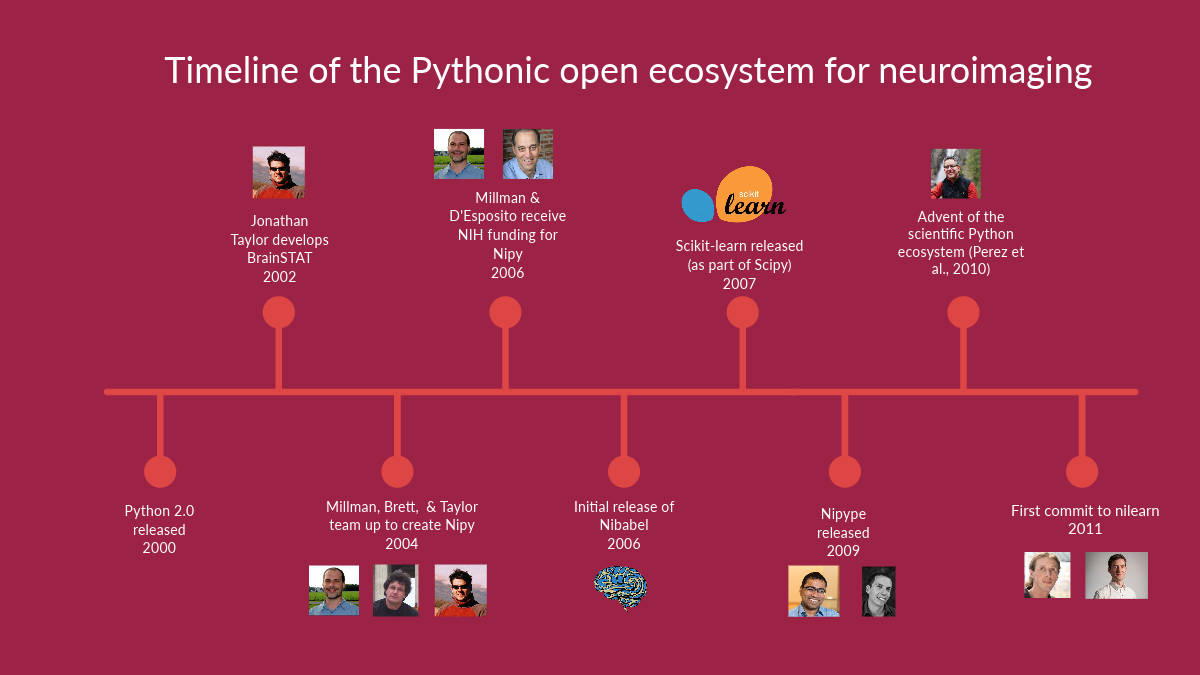
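Capturing at least part of the “complete software development environment” can be automated. A minimal standard-library sketch that records the interpreter, platform and installed package versions so they can be saved next to analysis outputs; the function name is hypothetical, and this is no substitute for a container image or a lock file.

```python
import json
import platform
import sys
from importlib import metadata


def describe_environment(packages=None):
    """Return interpreter, platform and installed-package versions as a dict.

    If `packages` is given, only those distribution names are reported.
    """
    installed = {}
    for dist in metadata.distributions():
        name = dist.metadata.get("Name")
        if name:
            installed[name] = dist.version
    if packages is not None:
        installed = {name: installed[name] for name in packages if name in installed}
    return {
        "python": sys.version,
        "platform": platform.platform(),
        "packages": dict(sorted(installed.items(), key=lambda item: item[0].lower())),
    }


# Example: report a few analysis dependencies (those not installed are simply omitted).
print(json.dumps(describe_environment(packages=["numpy", "scipy", "nibabel"]), indent=2))
```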

Seeds of openness: Open source software

- All of the major neuroimaging analysis packages are open source

- SPM (1991)

- Enabled my first open source contribution: the SPM ROI toolbox (2000)

- AFNI (1994)

- FreeSurfer (1999)

- FSL (2000)

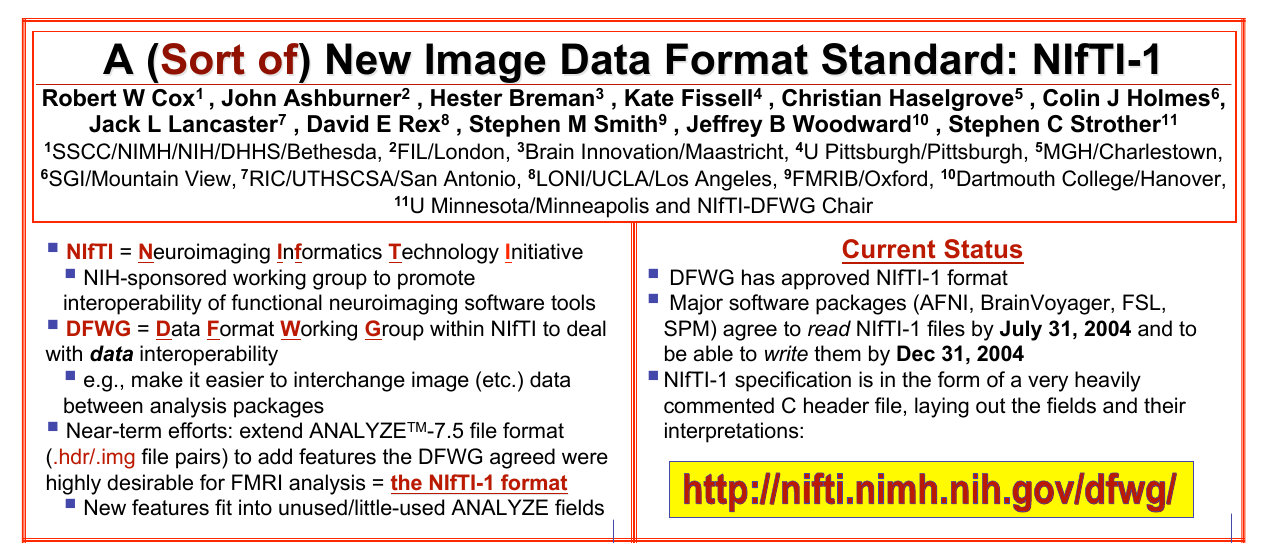

Seeds of openness: A common file format

Cox et al., OHBM, 2004
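The format presented by Cox et al. (2004) is NIfTI-1, whose header is a fixed 348-byte binary block. The sketch below reads a few fields using offsets from the NIfTI-1 header definition (nifti1.h) and builds a synthetic header for testing; the helper names and example dimensions are hypothetical, and in practice a maintained reader such as nibabel handles the many edge cases this ignores.

```python
import struct


def read_nifti1_header(raw: bytes) -> dict:
    """Parse selected fields of a NIfTI-1 header (the first 348 bytes of a .nii file)."""
    # sizeof_hdr must equal 348; whichever byte order yields 348 is the file's endianness.
    if struct.unpack("<i", raw[:4])[0] == 348:
        endian = "<"
    elif struct.unpack(">i", raw[:4])[0] == 348:
        endian = ">"
    else:
        raise ValueError("not a NIfTI-1 header")
    dim = struct.unpack(endian + "8h", raw[40:56])       # dim[0] = number of dimensions
    datatype, bitpix = struct.unpack(endian + "2h", raw[70:74])
    pixdim = struct.unpack(endian + "8f", raw[76:108])   # voxel sizes in pixdim[1:]
    (vox_offset,) = struct.unpack(endian + "f", raw[108:112])
    ndim = dim[0]
    return {
        "shape": dim[1:1 + ndim],
        "voxel_size": pixdim[1:1 + ndim],
        "datatype": datatype,
        "bitpix": bitpix,
        "vox_offset": vox_offset,
        "single_file": raw[344:348] == b"n+1\x00",       # magic for a single .nii file
    }


def make_demo_header(shape=(91, 109, 91), voxel_size=(2.0, 2.0, 2.0)) -> bytes:
    """Build a minimal little-endian NIfTI-1 header for testing (most fields left zero)."""
    buf = bytearray(348)
    struct.pack_into("<i", buf, 0, 348)
    struct.pack_into("<8h", buf, 40, len(shape), *shape, *([1] * (7 - len(shape))))
    struct.pack_into("<2h", buf, 70, 16, 32)  # datatype 16 = float32, 32 bits per voxel
    struct.pack_into("<8f", buf, 76, 1.0, *voxel_size, *([1.0] * (7 - len(voxel_size))))
    struct.pack_into("<f", buf, 108, 352.0)   # data follow the header plus 4 extension bytes
    buf[344:348] = b"n+1\x00"
    return bytes(buf)


print(read_nifti1_header(make_demo_header()))
```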

Seeds of openness: fMRI data sharing

A false start for fMRI data sharing

This letter comes from a group of scientists who are publishing papers using fMRI to understand the links between brain and behavior. We are writing in reaction [to] the recent announcement of the creation of the National fMRI Data Center (www.fmridc.org). In the letter announcing the creation of the center, it was also implied that leading journals in our field may require authors of all fMRI related papers accepted for publication to submit all experimental data pertaining to their paper to the Data Center. … We are particularly concerned with any journal’s decision to require all authors of all fMRI related papers accepted for publication to submit all experimental data pertaining to their paper to the Data Center.

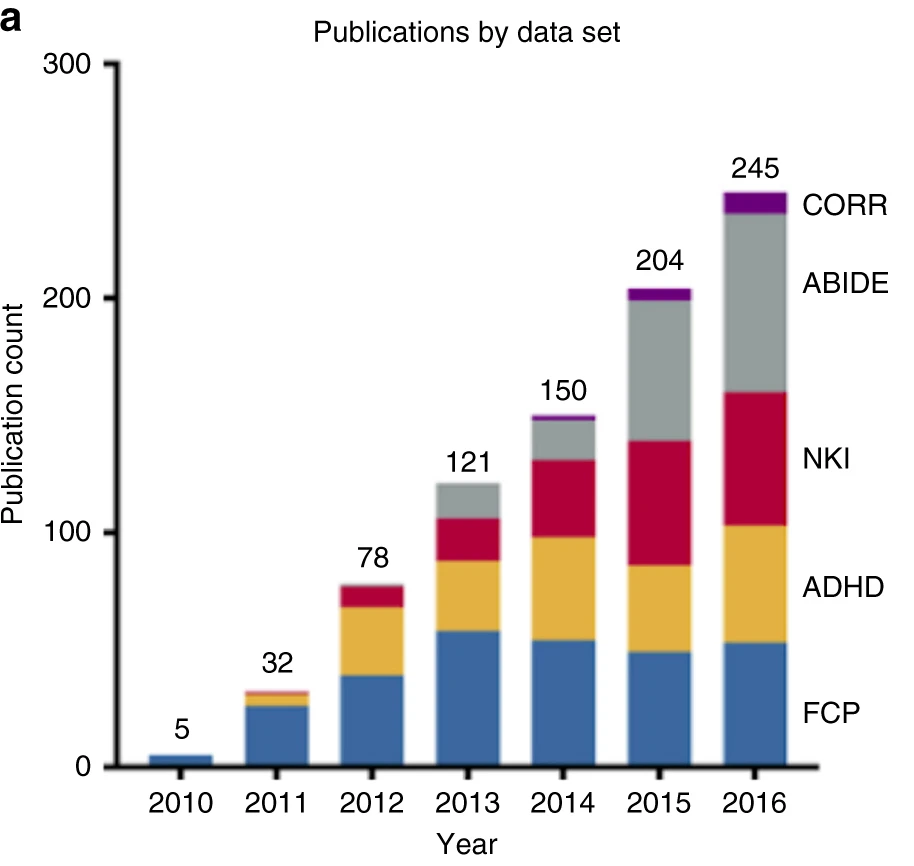

2010: The year data sharing broke in neuroimaging

- “Comprehensive mapping of the functional connectome, and its subsequent exploitation to discern genetic influences and brain–behavior relationships, will require multicenter collaborative datasets. Here we initiate this endeavor by gathering R-fMRI data from 1,414 volunteers collected independently at 35 international centers. We demonstrate a universal architecture of positive and negative functional connections, as well as consistent loci of inter-individual variability. …”

Data sharing is becoming the norm in neuroimaging

Poldrack et al., Annual Review of Biomedical Data Science, 2019

Milham et al., Nature Communications, 2018

Anonymous senior researcher circa 2019:

“OHBM has been taken over by the open science zealots!”

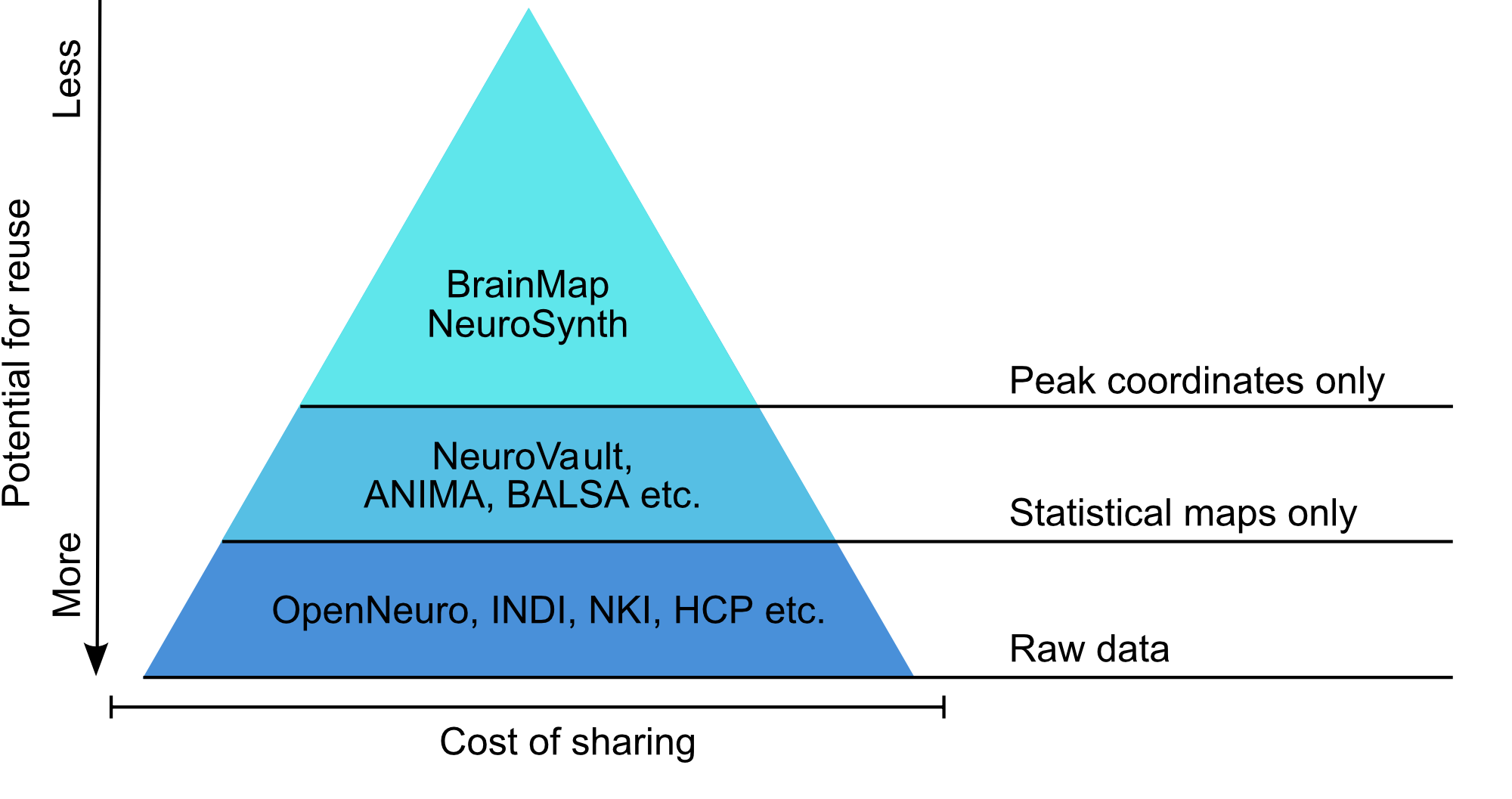

An open ecosystem for retrospective data sharing

- Neurosynth.org: Open database of published neuroimaging coordinates

- Neurovault.org: Open archive for neuroimaging results

- OpenNeuro.org: Open archive for raw/processed neuroimaging data

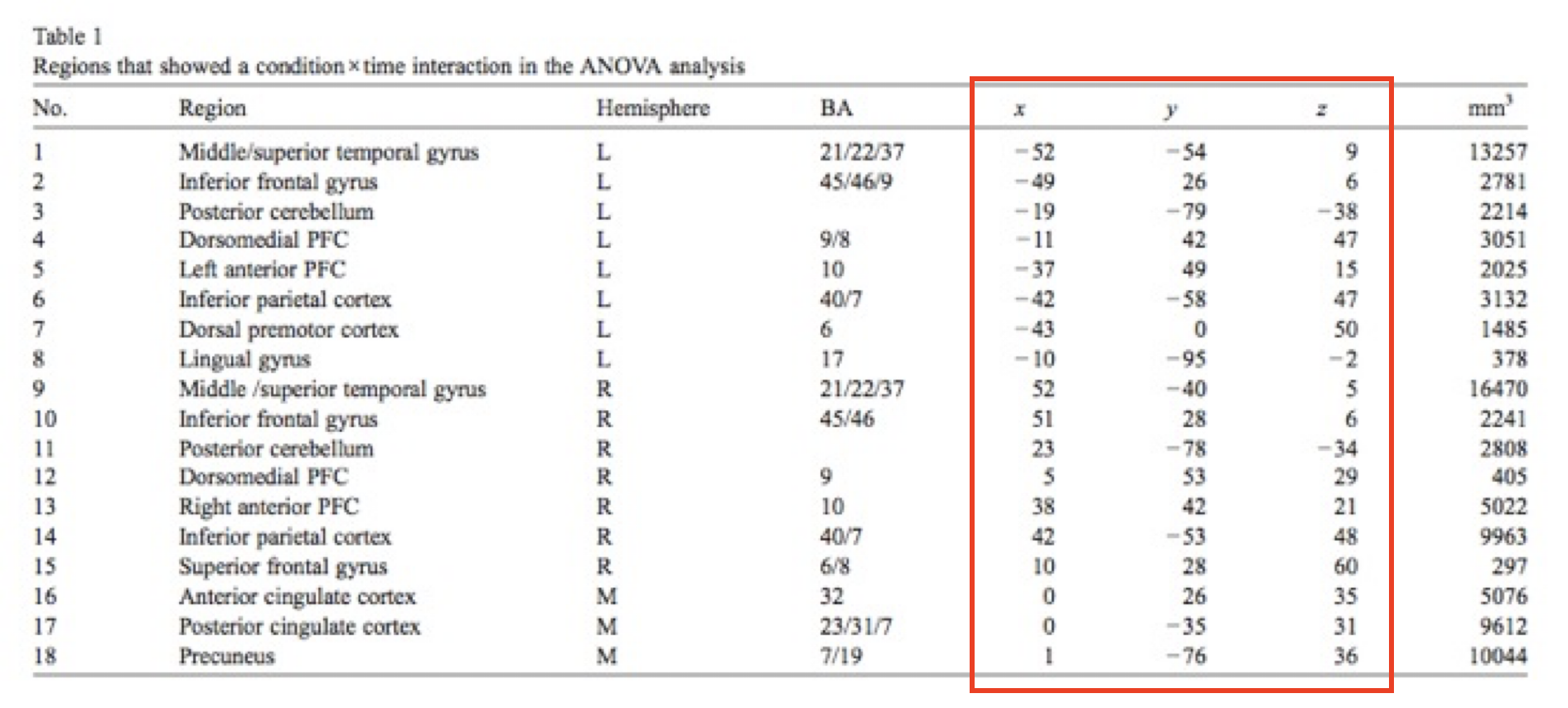

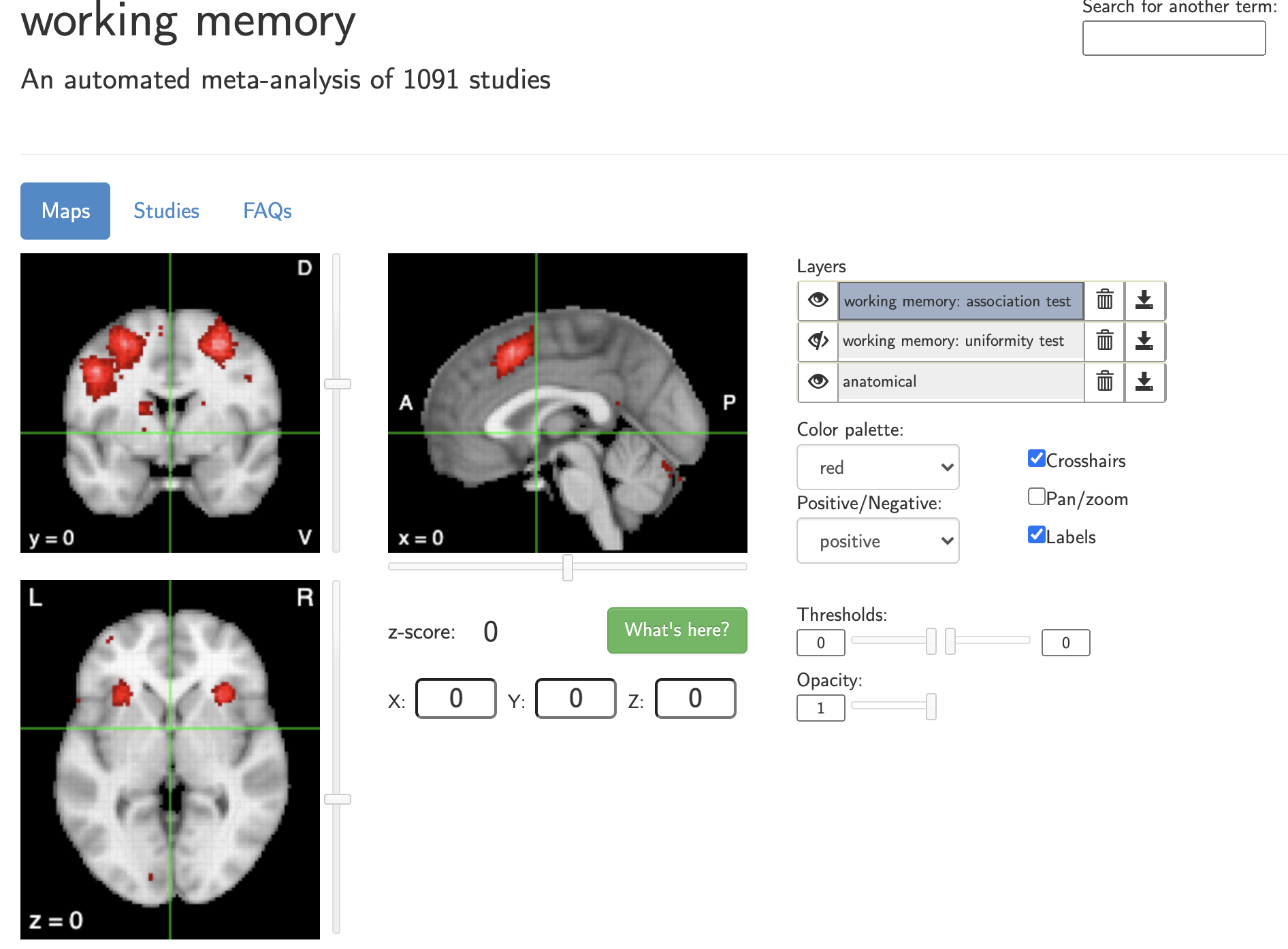

Neurosynth: Sharing activation coordinates

- Brain activity is reported in a (somewhat) standardized coordinate system

- Neurosynth uses published coordinates to create meta-analytic maps relating terms (from abstracts) to activation

Yarkoni et al., 2011, Nature Methods

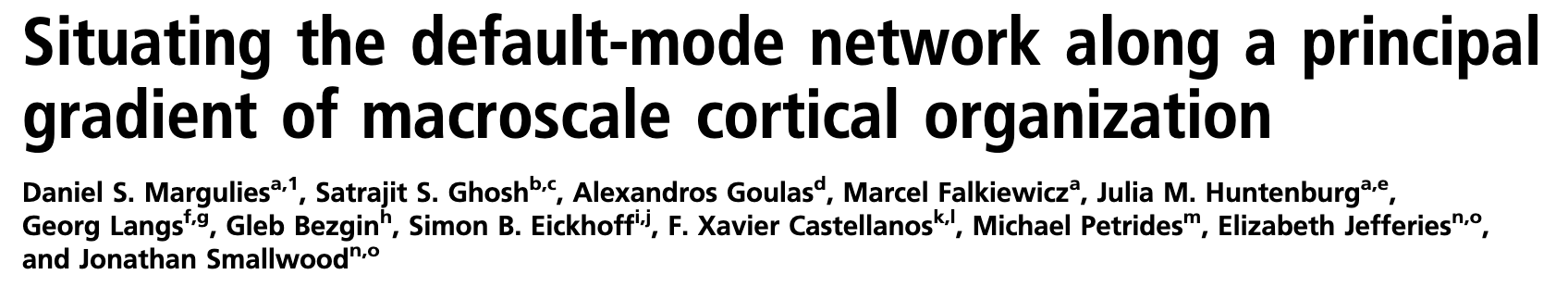

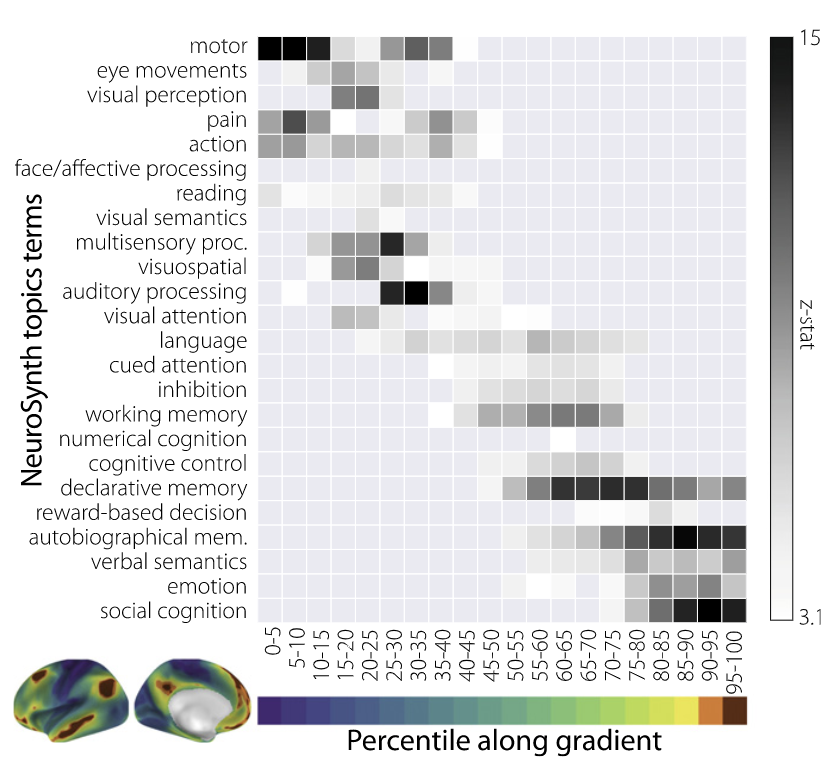
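The core idea of a term-based meta-analysis can be sketched in a few lines. This is a simplified illustration, not Neurosynth's implementation: each toy study (hypothetical data) records whether a term appears in its abstract and which voxels lie near its reported coordinates. The code compares how often a voxel is active in studies with and without the term, then converts that into a reverse-inference probability under an assumed uniform prior P(term) = 0.5.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Study:
    has_term: bool            # term appears in the abstract (toy flag)
    active_voxels: frozenset  # voxels near the study's reported coordinates


def term_association(studies, voxel):
    """Forward and reverse inference for one voxel; both study groups must be non-empty."""
    with_term = [s for s in studies if s.has_term]
    without_term = [s for s in studies if not s.has_term]
    p_act_term = sum(voxel in s.active_voxels for s in with_term) / len(with_term)
    p_act_no_term = sum(voxel in s.active_voxels for s in without_term) / len(without_term)
    total = p_act_term + p_act_no_term
    # Bayes' rule with P(term) = P(no term) = 0.5, so the priors cancel.
    p_term_act = p_act_term / total if total else 0.0
    return p_act_term, p_act_no_term, p_term_act


STUDIES = [  # hypothetical toy data
    Study(True, frozenset({1, 2})),
    Study(True, frozenset({1})),
    Study(True, frozenset({1, 3})),
    Study(True, frozenset({2})),
    Study(False, frozenset({1})),
    Study(False, frozenset({3})),
    Study(False, frozenset({2, 3})),
    Study(False, frozenset({3})),
]
print(term_association(STUDIES, voxel=1))
```

For voxel 1 the toy data give P(activation | term) = 0.75, P(activation | no term) = 0.25 and P(term | activation) = 0.75.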

Example of Neurosynth usage

- Identified gradients of functional organization across the cortex

- Used Neurosynth to identify the most common terms associated with each gradient

Neurovault: Sharing neuroimaging results

- The results of most neuroimaging studies are images with statistical estimates at each voxel

- Neurovault.org is an open archive for these results

- Each image receives a persistent identifier for sharing

Gorgolewski et al., 2015, Frontiers in Neuroinformatics

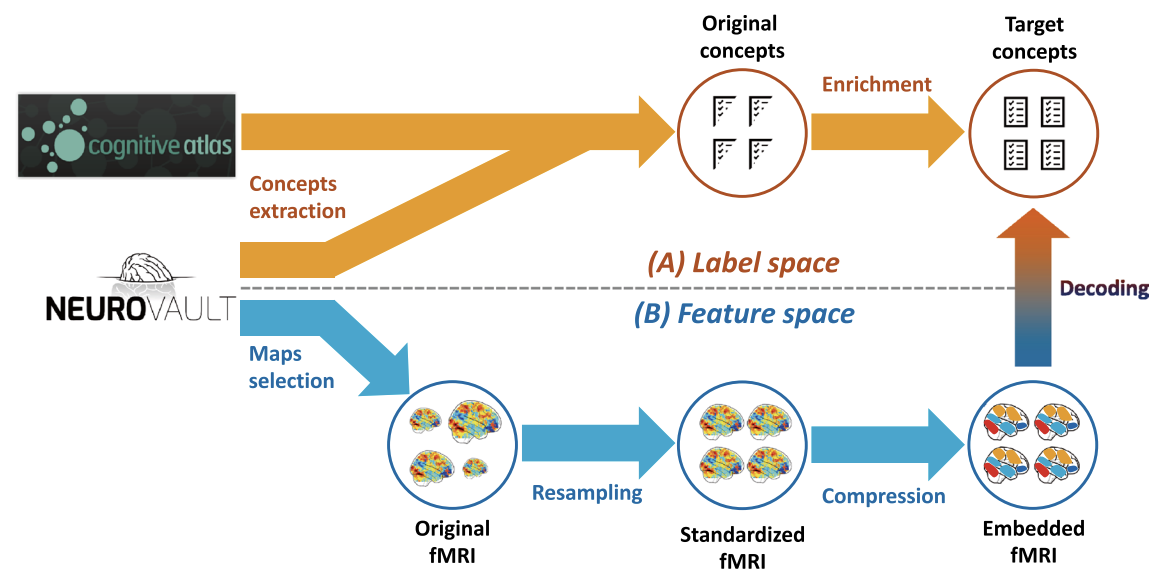

Example of Neurovault usage

OpenNeuro: Sharing raw and processed neuroimaging data

Markiewicz et al., 2021, eLife

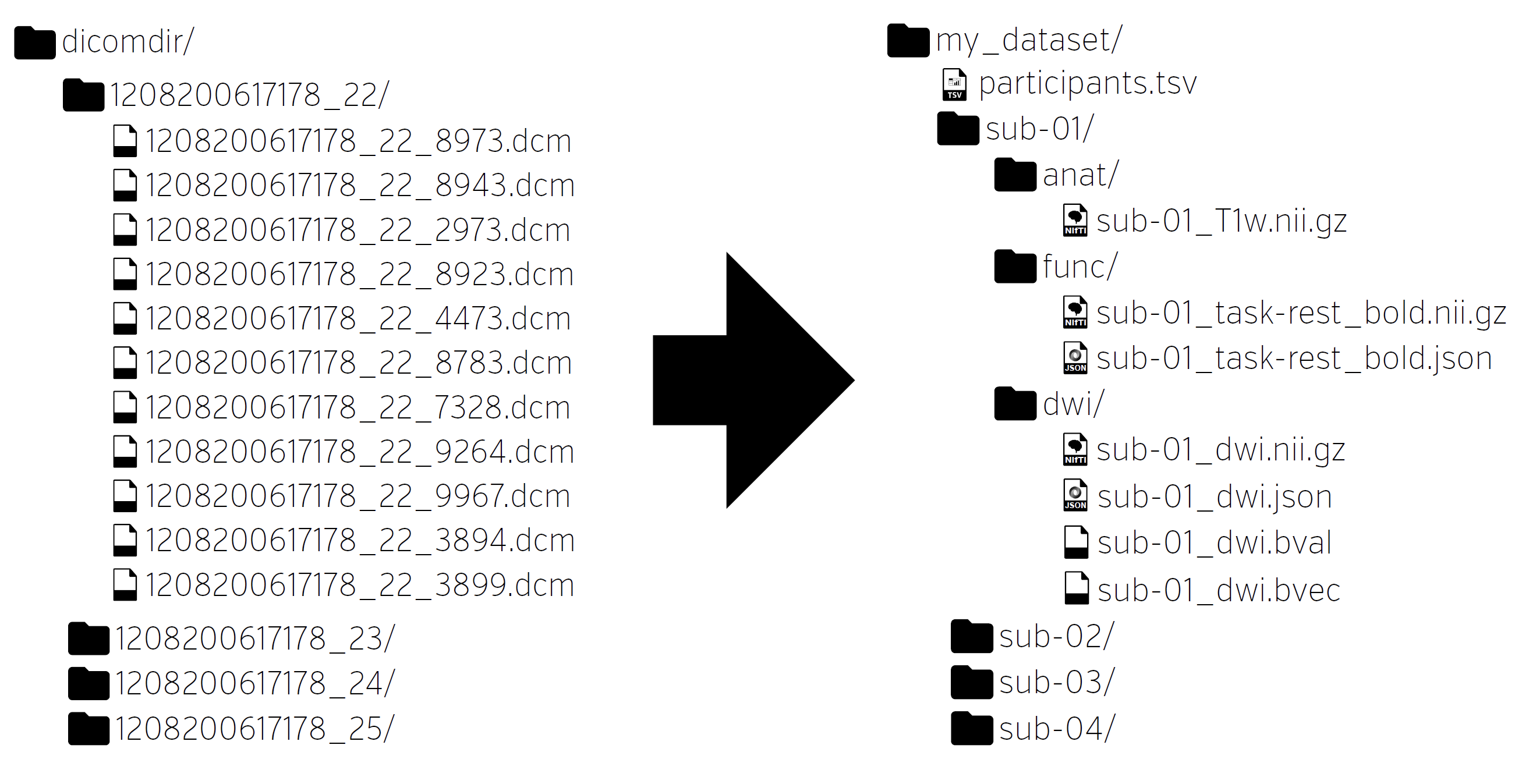

It’s easy to share data badly

Data Sharing and Management Snafu in 3 Short Acts

- I received the data, but when I opened it up it was in hexadecimal

- Yes, that is right

- I cannot read hexadecimal

- You asked for my data and I gave it to you. I have done what you asked.

…

- Is there a guide to the data anywhere?

- Yes, of course, it is the article that is published in Science.

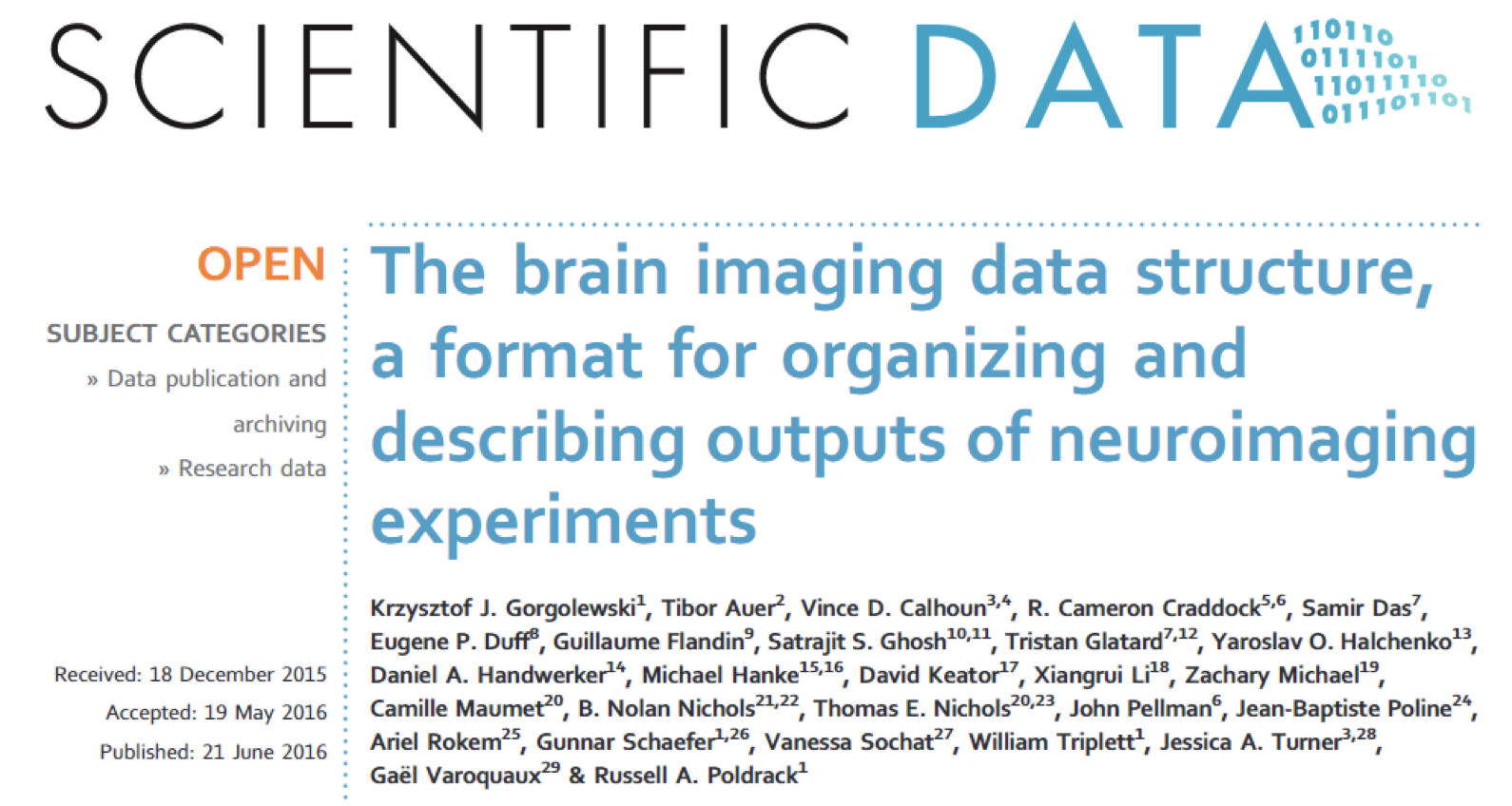

Brain Imaging Data Structure (BIDS)

- A community-based open standard for neuroimaging data

- A file organization standard

- A metadata standard

- Available for MRI, PET, EEG, iEEG, fNIRS

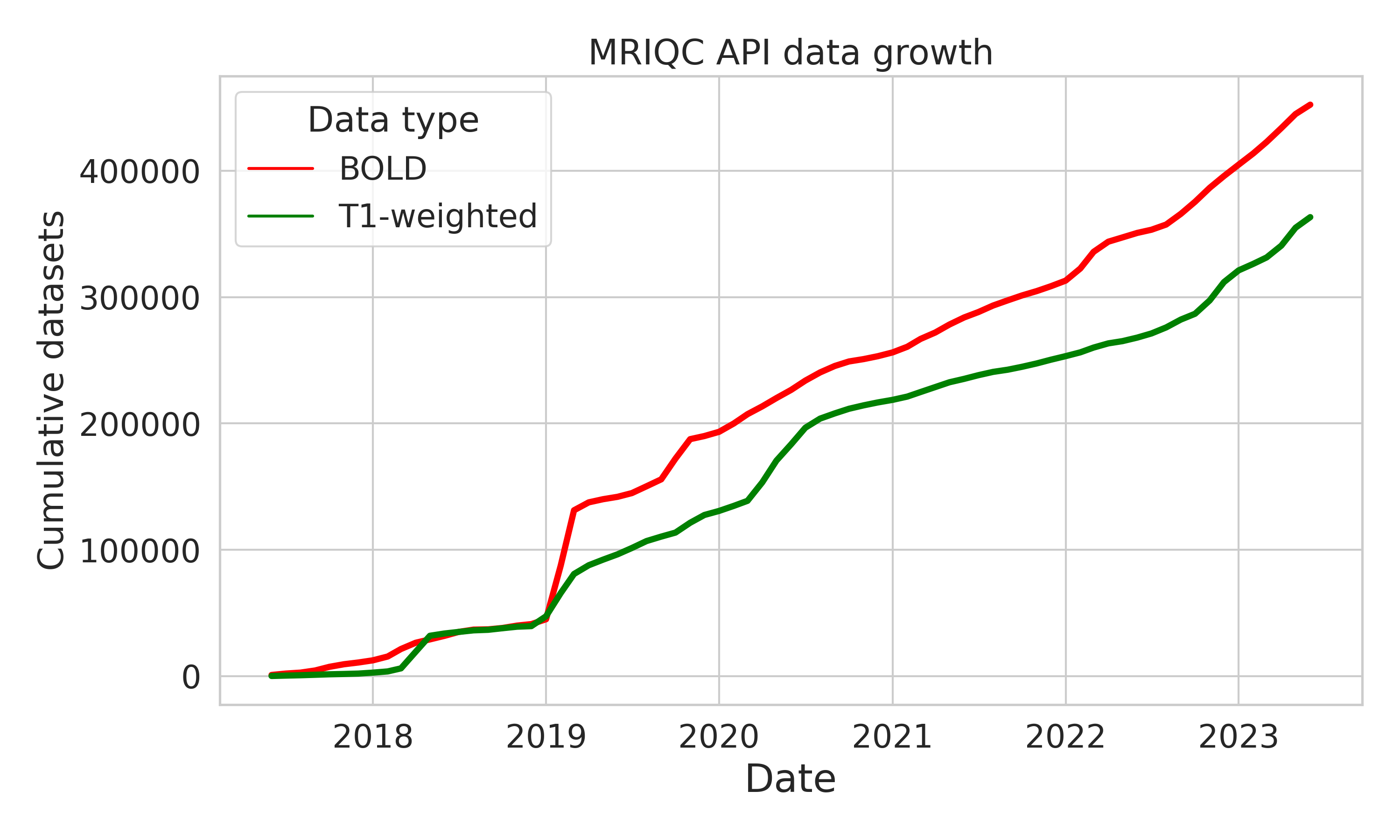
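Because BIDS encodes metadata in file names as key-value entities (for example `sub-01_ses-pre_task-rest_run-1_bold.nii.gz` inside `sub-01/ses-pre/func/`), tools can read them without lab-specific conventions. A minimal parser that performs no validation; for real datasets, PyBIDS and the BIDS Validator are the appropriate tools.

```python
from pathlib import PurePosixPath


def parse_bids_filename(path: str) -> dict:
    """Split a BIDS-style filename into entities, suffix and extension (no validation)."""
    name = PurePosixPath(path).name
    stem, dot, ext = name.partition(".")  # keeps multi-part extensions like .nii.gz
    *pairs, suffix = stem.split("_")
    entities = {}
    for pair in pairs:
        key, sep, value = pair.partition("-")
        if not sep or not value:
            raise ValueError(f"malformed entity {pair!r} in {name!r}")
        entities[key] = value
    return {"entities": entities, "suffix": suffix, "extension": dot + ext}


print(parse_bids_filename("sub-01/ses-pre/func/sub-01_ses-pre_task-rest_run-1_bold.nii.gz"))
```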

The growing usage of BIDS: An example

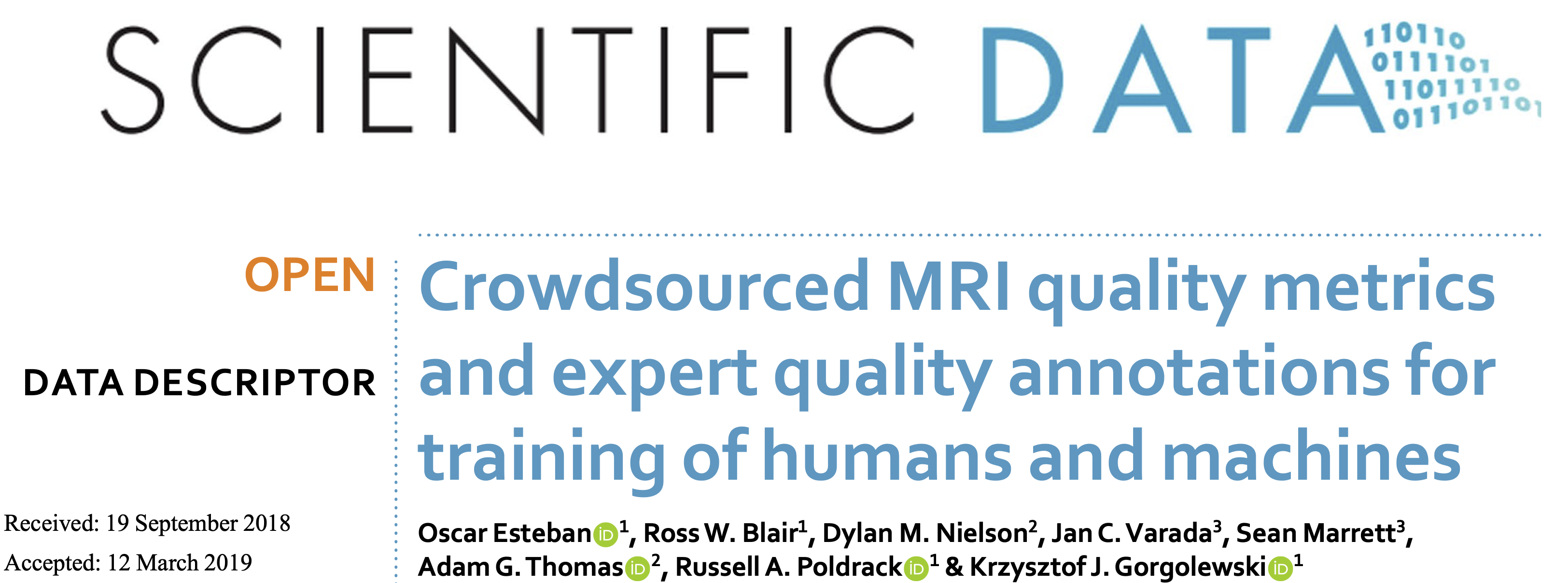

- MRIQC Web API

- Crowdsourced database of MR QC metrics

- QC metrics from ~450K unique BOLD scans and ~350K T1w scans as of June 2023

- Publicly available: https://mriqc.nimh.nih.gov/

BIDS has enabled consistent growth of OpenNeuro

Updated from Markiewicz et al., 2021, eLife

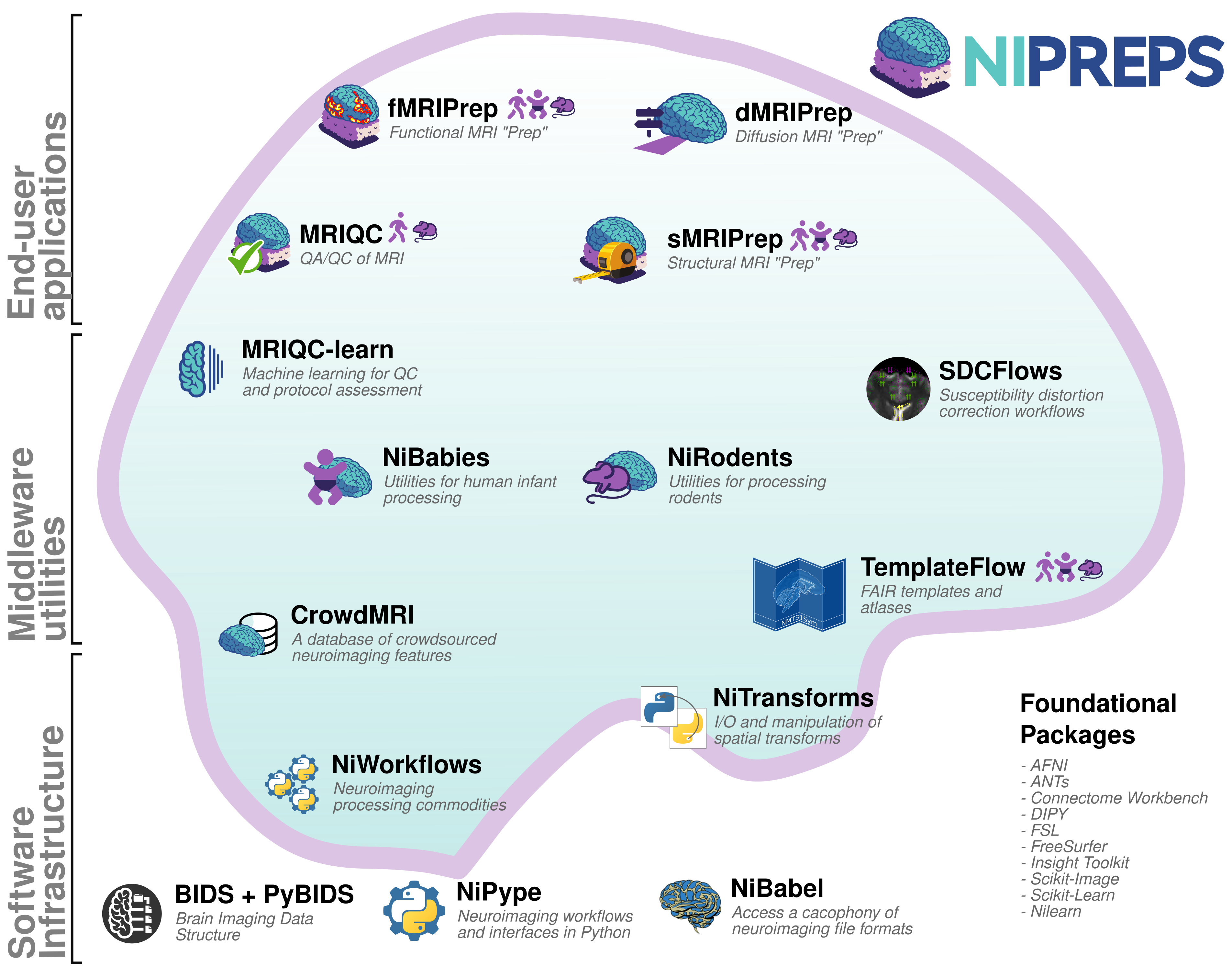

An open-source Pythonic software ecosystem

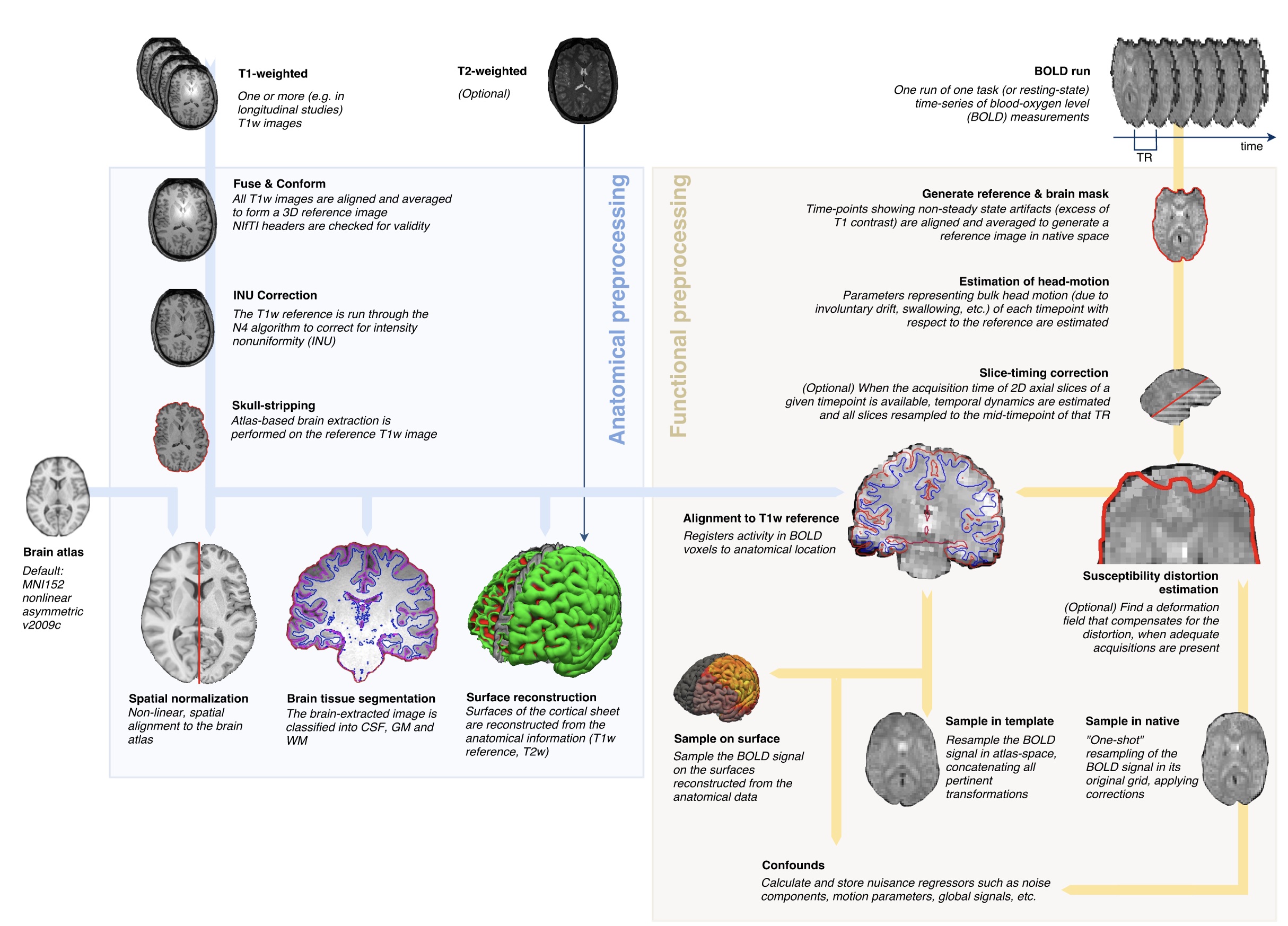

fMRIPrep: Robust preprocessing of fMRI data

fmriprep.org; Esteban et al., 2019

MRIQC: MRI quality control for BIDS data

mriqc.org; Esteban et al., 2017

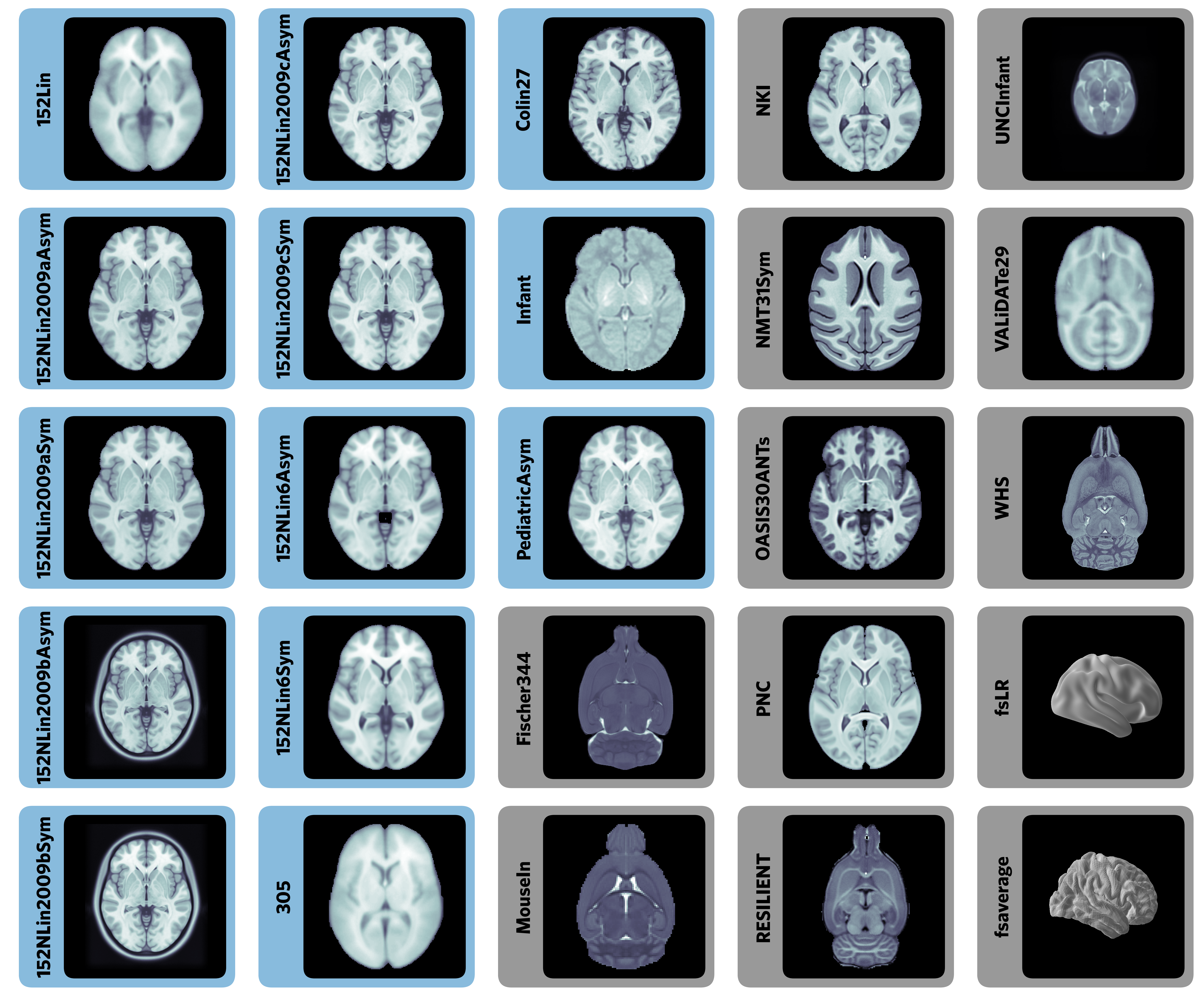
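Head-motion summaries such as framewise displacement (FD) are among the metrics MRIQC reports for BOLD runs. A common definition sums the absolute volume-to-volume changes in the six rigid-body parameters, converting rotations to millimetres on a sphere of assumed 50 mm radius. The sketch assumes each row lists three translations (mm) followed by three rotations (radians); packages differ in this ordering, so check the source of your motion parameters.

```python
def framewise_displacement(motion, radius_mm=50.0):
    """FD per volume from rows of (tx, ty, tz, rx, ry, rz); translations in mm, rotations in radians."""
    fd = [0.0]  # the first volume has no predecessor
    for prev, cur in zip(motion, motion[1:]):
        translation = sum(abs(c - p) for c, p in zip(cur[:3], prev[:3]))
        rotation = sum(abs(c - p) * radius_mm for c, p in zip(cur[3:], prev[3:]))  # arc length in mm
        fd.append(translation + rotation)
    return fd


motion = [
    (0.0, 0.0, 0.0, 0.0, 0.0, 0.0),
    (0.1, 0.0, 0.0, 0.0, 0.0, 0.0),
    (0.1, 0.2, 0.0, 0.002, 0.0, 0.0),
]
print(framewise_displacement(motion))
```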

TemplateFlow: FAIR sharing of neuroimaging templates and atlases

Templates and atlases are commonly used in neuroimaging research

There is a significant lack of clarity in the use of these templates

- There are numerous versions of the widely used “MNI template”

TemplateFlow provides programmatic access to a database of templates and mappings between them

Easy to use for humans and machines:

Ciric et al., 2022, Nature Methods


Threats to open neuroscience

- Open science has gained a strong foothold in neuroimaging

- But there are a number of threats to its success

Performative open science

- Data are often shared badly

- Of papers in Psychological Science with an open data badge, only 76% had usable data (Kidwell et al., 2016)

- Reported statistics were reproducible without involvement of the original authors in only 36% of papers (Hardwicke et al., 2021)

- Shared analysis code is rarely usable without substantial modification

- Common problems include missing files and hardcoded paths

- Goodhart’s law: “When a measure becomes a target, it ceases to be a good measure.”
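Hardcoded paths are among the easiest of these problems to avoid. A minimal sketch, assuming a hypothetical `--data-dir` option and a hypothetical `STUDY_DATA_DIR` environment variable: the script takes the dataset location from the command line or the environment and fails with a clear message otherwise, so the same code runs unchanged on another machine.

```python
import argparse
import os
from pathlib import Path


def resolve_data_dir(cli_value=None, env_var="STUDY_DATA_DIR"):
    """Find the dataset root from a CLI value, then an environment variable, else fail loudly."""
    if cli_value:
        return Path(cli_value).expanduser().resolve()
    env_value = os.environ.get(env_var)
    if env_value:
        return Path(env_value).expanduser().resolve()
    raise SystemExit(f"Data directory not set: pass --data-dir or set {env_var}")


def main(argv=None):
    parser = argparse.ArgumentParser(description="Example analysis entry point")
    parser.add_argument("--data-dir", help="root of the (e.g. BIDS) dataset")
    args = parser.parse_args(argv)
    data_dir = resolve_data_dir(args.data_dir)
    # Every other path is built relative to the dataset root, never hardcoded.
    print(f"Reading {data_dir / 'participants.tsv'}")
```

A hypothetical `analysis.py` calling `main()` could then be run as `python analysis.py --data-dir ~/data/my_bids_dataset` or with `STUDY_DATA_DIR` set instead.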

Incentives for open science

- Current incentive structures reward irreproducible practices

- Publication in high-profile journals

- Data hoarding

- In the US, a large group of universities is working together to change this

- Higher Education Leadership Initiative for Open Scholarship (HELIOS)

- Working to reform hiring, tenure, and promotion practices to reflect the importance of open scholarship

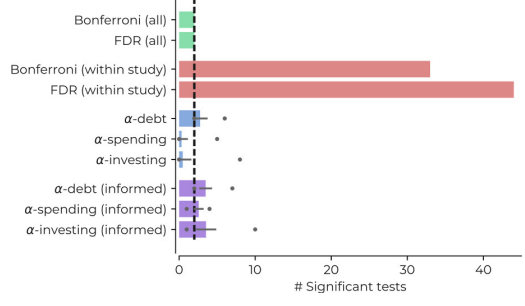

Dataset decay

Dataset decay example

- 182 behavioral measures and 68 cortical thickness measures (12,376 total tests)

- Correcting for all tests simultaneously controls the false positive (or false discovery) rate

- If each behavioral measure were tested by a different researcher in a separate paper (correcting only for the 68 thickness measures), the false positive rate across the literature would be highly inflated

Thompson et al., 2020
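The inflation in this example follows from simple arithmetic, assuming null data and treating tests as independent (the behavioral measures are in fact correlated, so the exact numbers are illustrative). A Bonferroni correction over 68 tests keeps each paper's familywise error near 0.05, but across 182 papers at least one false positive is almost certain, whereas correcting once for all 12,376 tests keeps the overall rate near 0.05.

```python
def familywise_error(n_tests, alpha):
    """P(at least one false positive) across independent null tests at level alpha."""
    return 1.0 - (1.0 - alpha) ** n_tests


N_BEHAVIOR, N_THICKNESS = 182, 68
TOTAL_TESTS = N_BEHAVIOR * N_THICKNESS  # 12,376 tests in total

# One paper: Bonferroni over the 68 thickness measures only.
per_paper = familywise_error(N_THICKNESS, 0.05 / N_THICKNESS)

# 182 papers, one per behavioral measure, all analyzing the same null data.
across_papers = familywise_error(N_BEHAVIOR, per_paper)

# A single analysis that corrects for all 12,376 tests at once.
all_at_once = familywise_error(TOTAL_TESTS, 0.05 / TOTAL_TESTS)

print(f"per paper: {per_paper:.3f}, across papers: {across_papers:.4f}, all at once: {all_at_once:.3f}")
```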

AI: Negative impacts

- Used recklessly, machine learning methods can easily go wrong

“We surveyed a variety of research that uses ML and found that data leakage affects at least 294 studies across 17 fields, leading to overoptimistic findings.”

Checklist at https://reforms.cs.princeton.edu/

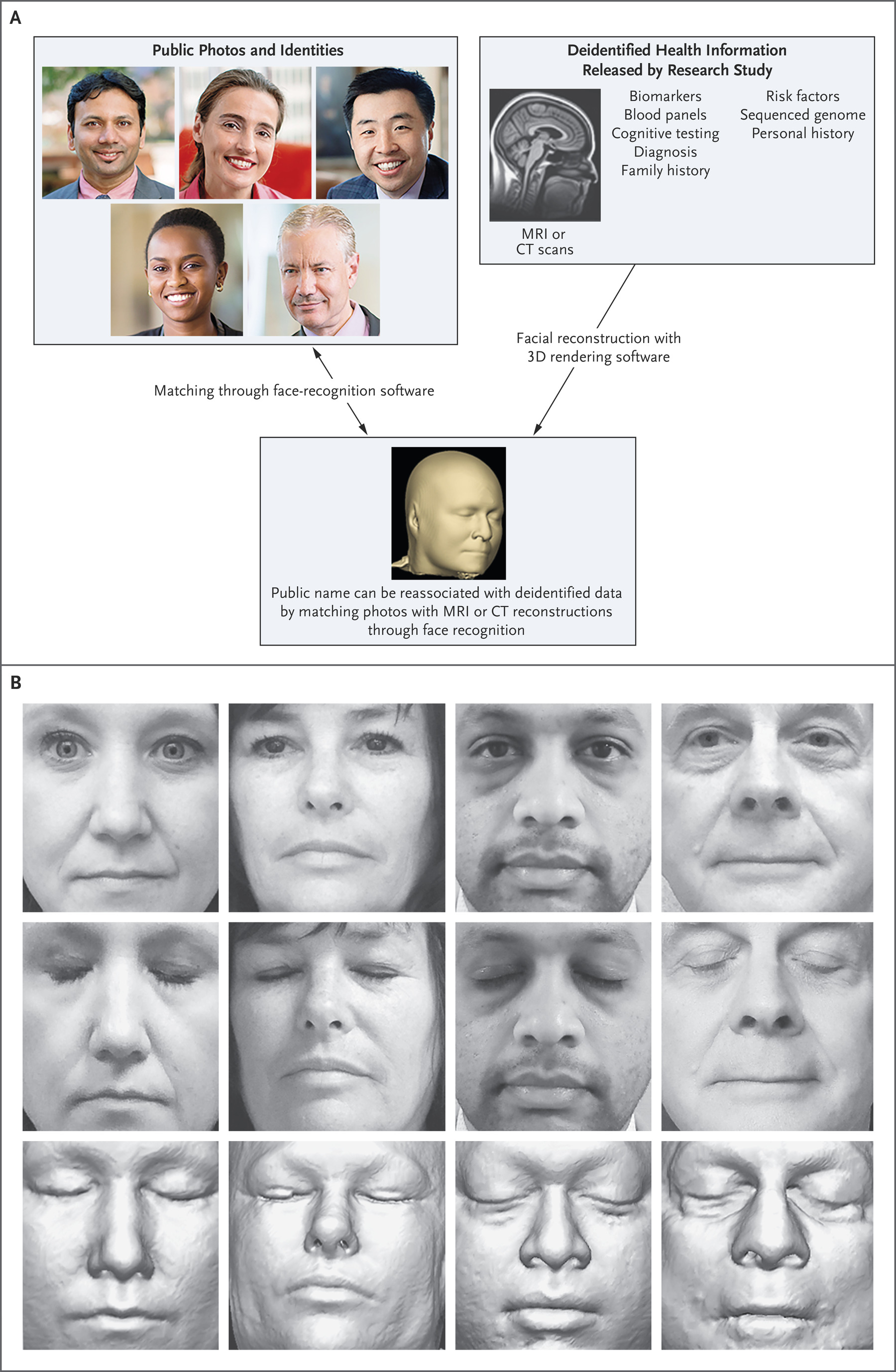
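One common source of leakage in neuroimaging is letting scans from the same participant appear in both training and test sets. A standard-library sketch of subject-wise cross-validation in the spirit of scikit-learn's `GroupKFold` (not its exact algorithm): every sample from a given subject is assigned to the same fold. The subject labels in the usage example are hypothetical.

```python
import random


def group_kfold(groups, n_splits=5, seed=0):
    """Yield (train_idx, test_idx) so that all samples from one subject share a fold."""
    subjects = sorted(set(groups))
    random.Random(seed).shuffle(subjects)
    fold_of = {subject: i % n_splits for i, subject in enumerate(subjects)}
    for k in range(n_splits):
        test = [i for i, g in enumerate(groups) if fold_of[g] == k]
        train = [i for i, g in enumerate(groups) if fold_of[g] != k]
        yield train, test


# Ten hypothetical subjects with four runs each.
groups = [f"sub-{s:02d}" for s in range(10) for _ in range(4)]
for train, test in group_kfold(groups):
    print(sorted({groups[i] for i in test}))
```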

- AI face recognition models could defeat current deidentification methods

- “For 70 of the 84 participants (83%), the software chose the correct MRI scan as the most likely match for their photos. The correct MRI scan was among the top five choices for 80 of 84 participants (95%).” (Schwartz et al., 2019)

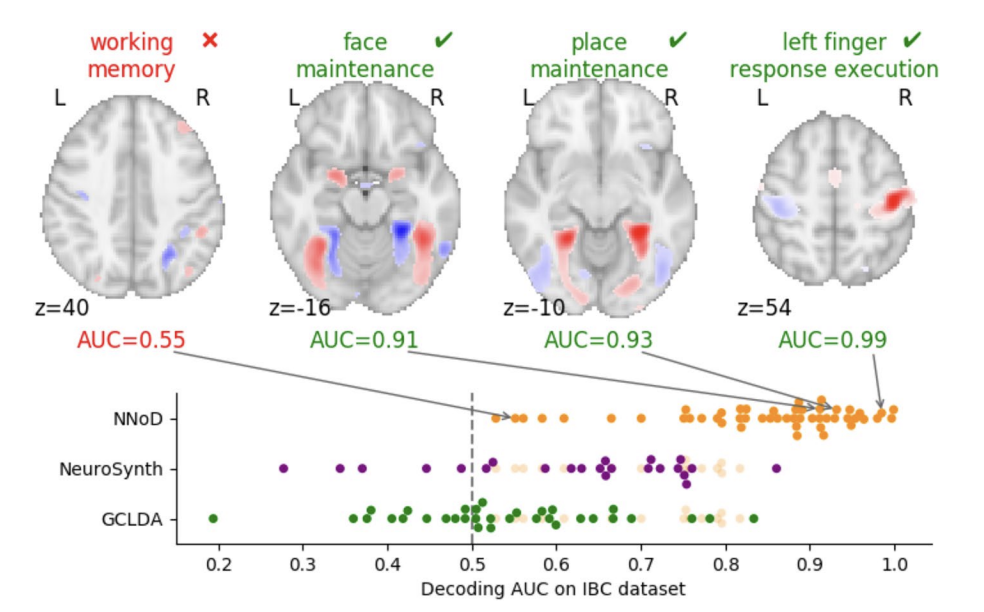

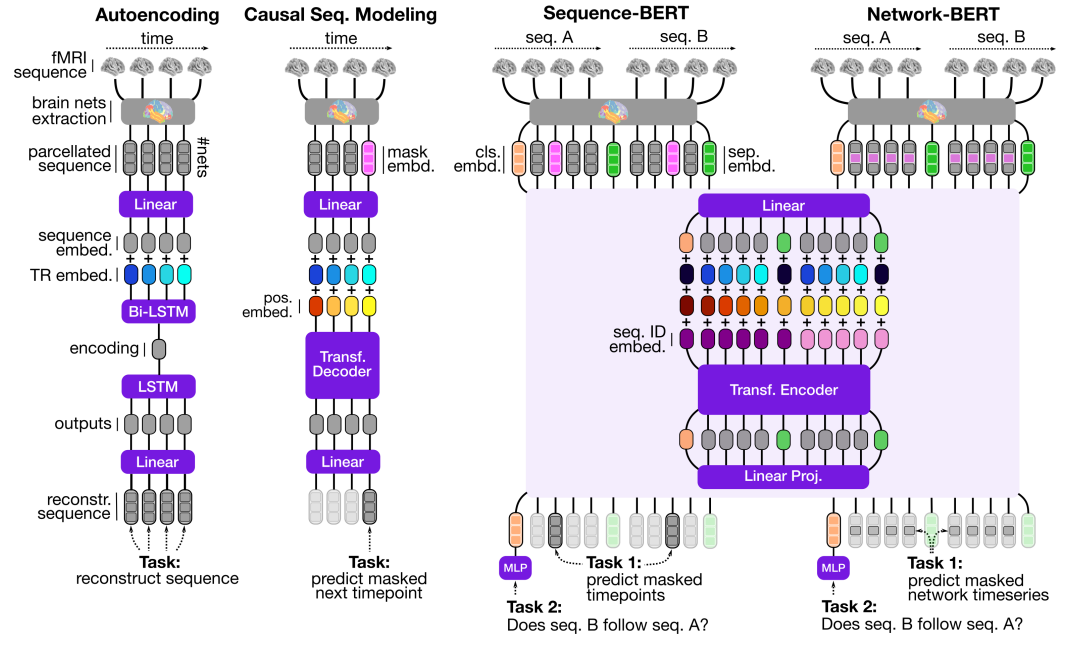

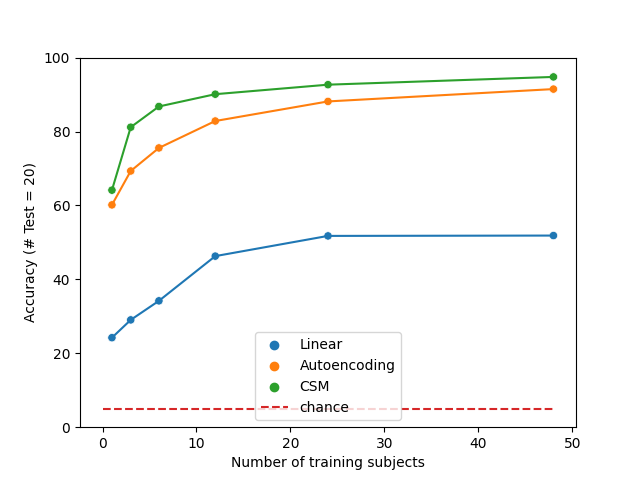

AI: Positive impacts

Foundation models can improve decoding from small samples

Thomas et al., NeurIPS 2022

Conclusions

- The field of neuroimaging endured a number of crises that inspired a credibility revolution

- An impressive Pythonic open source ecosystem has emerged in our field

- We need to be aware of both opportunities and threats to the future of open neuroscience

http://poldrack.github.io/talks-FutureOpenNeuroscience